I had the chance this year to meet up with my client Thomas Dowson from “Archaeology Travel Media” at the Travel Innovation Summit in Seville.

Over the past 2 years we have been revamping all the content from archaeology-travel.com and integrating a sophisticated travel itinerary builder system into the mix. We are almost feature complete and are currently fine-tuning the system. New explorers are welcome to sign up and test-drive our set of unique features.

It was so nice to finally meet the whole team in person and celebrate what we have accomplished together so far.

Directly taken from the front-page :)

“EXPLORE THE WORLD’S PASTS WITH ARCHAEOLOGY TRAVEL GUIDES, CRAFTED BY EXPERIENCED ARCHAEOLOGISTS & HISTORIANS

Whatever your preferred style of travel, budget or luxury, backpacker or hand luggage only, slow or adventure, if you are interested in archaeology, history and art this is an online travel guide just for you.

Here you will find ideas for where to go, what sites, monuments, museums and art galleries to see, as well as information and tips on how to get there and what tickets to buy.

Our destination and thematic guides are designed to assist you to find and/or create adventures in archaeology and history that suit you, be it a bucket list trip or visiting a hidden gem nearby.”

More Details

About

Mission & Vision

Code of Ethics

We are constantly expanding our set of curated destinations, locations and POIs. Our plan is to make it even easier to find unique places for your next travel experience.

We are also working on partnerships to enhance travel options and offer an even broader variety of additional content.

Looking forward to all the things to come, as well as to the continued exceptional collaboration between all team members.

First a bit of context :)

Translation within WordPress is based on Gettext. Gettext is a software internationalization and localization (i18n) framework used in many programming languages to facilitate the translation of software applications into different languages. It provides a set of tools and libraries for managing multilingual strings and translating them into various languages.

The primary goal of Gettext is to separate the text displayed in an application from the code that generates it. It allows developers to mark strings in their code that need to be translated and provides mechanisms for extracting those strings into a separate file known as a “message catalog” or “translation template.”

The translation template file contains the original strings and serves as a basis for translators to provide translations for different languages. Translators use specialized tools, such as Poedit or Lokalize, to work with the message catalog files. These tools help them associate translated strings with their corresponding original strings, making the translation process more manageable.

At runtime, Gettext libraries are used to retrieve the appropriate translated strings based on the user’s language preferences and the available translations in the message catalog. It allows applications to display the user interface, messages, and other text elements in the language preferred by the user.
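To make the runtime lookup concrete, here is a minimal sketch of the idea: a catalog per locale, and a fallback to the original string when no translation exists. The `catalogs` object and the `translate` function are hypothetical names for illustration only; real Gettext reads compiled catalog files and also handles plurals and contexts, which this sketch ignores.

```javascript
// Hypothetical in-memory catalogs; real Gettext loads these from compiled files.
const catalogs = {
  de: { "Add to cart": "In den Warenkorb", "Sale!": "Angebot!" },
  fr: { "Add to cart": "Ajouter au panier" },
};

// Return the translation of `msgid` for `locale`,
// or the original string when no translation is available.
function translate(msgid, locale) {
  const catalog = catalogs[locale] || {};
  return Object.prototype.hasOwnProperty.call(catalog, msgid)
    ? catalog[msgid]
    : msgid;
}

console.log(translate("Add to cart", "de"));
console.log(translate("Sale!", "fr")); // no French entry, falls back to the original
```

The fallback is the important design point: a missing translation never breaks the interface, it just shows the source string.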

Gettext is widely used in various programming languages, including C, C++, Python, Ruby, PHP, and many others. It has become a de facto standard for internationalization and localization in the software development community due to its flexibility and extensive support in different programming environments.

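WordPress uses dedicated language files to store translations of your strings of text in other languages. Two formats are used: .po files (Portable Object, the human-readable text files that translators edit) and .mo files (Machine Object, the compiled binary files that WordPress loads at runtime).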

For years there has been a discussion about whether it makes sense to cache .mo files to speed up WordPress when multiple languages are in use. See the discussion of the WordPress Core team.

There have been a couple of plugins trying to fix this and prevent the reloading of language files on every page load.

Most of these use transients in your database and object caches if active.
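The caching idea itself is simple, and can be sketched as follows. This is an in-process simplification under my own assumptions, not how any of those plugins work: `parse` and `getMtime` are hypothetical stand-ins for "parse the language file" and "read the file's modification time", and the cache is a plain `Map` rather than a transient or object cache.

```javascript
// Cache parsed catalogs and re-parse only when the file's modification time changes.
// `parse` and `getMtime` are injected, so the sketch is independent of the file format.
function createCatalogCache(parse, getMtime) {
  const cache = new Map(); // path -> { mtime, catalog }
  return function load(path) {
    const mtime = getMtime(path);
    const hit = cache.get(path);
    if (hit && hit.mtime === mtime) return hit.catalog; // unchanged file: reuse
    const catalog = parse(path); // the expensive step we want to avoid repeating
    cache.set(path, { mtime, catalog });
    return catalog;
  };
}

let parseCount = 0;
const load = createCatalogCache(
  (path) => { parseCount += 1; return { path }; }, // stand-in parser
  () => 1700000000 // stand-in mtime that never changes
);
load("de_DE.mo");
load("de_DE.mo");
console.log(parseCount); // the second call is served from the cache
```

Keying on the modification time means an updated translation file invalidates its own cache entry without any manual flush.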

Well, it all depends on the number of language files and your infrastructure. This load/parse operation is quite CPU-intensive and takes a significant amount of time.

I decided not to bother with it in the past :) But I am always looking for ways to speed up multilingual websites, as that is my daily bread & butter ;) Check out Index WP MySQL For Speed & Index WP Users For Speed, which optimize the MySQL indexes for optimal speed. That change really does make a big difference!

One thing that can help to speed things up is to use the native gettext extension instead of the WordPress integration of it. This speeds up translation processing and helps big multilingual websites.

Native Gettext for WordPress by Colin Leroy-Mira provides just that.

Create a must use plugin and add this:

    <?php
    /** Plugin Name: Disable Gettext */
    add_filter('override_load_textdomain','__return_true');

This will prevent any .mo file from loading.

Please consider the performance impact. Read about it in the main documentation.

    <?php
    /**
     * Change text strings
     *
     * @link http://codex.wordpress.org/Plugin_API/Filter_Reference/gettext
     */
    function my_text_strings( $translated_text, $text, $domain ) {
        switch ( $translated_text ) {
            case 'Sale!' :
                $translated_text = __( 'Clearance!', 'woocommerce' );
                break;
            case 'Add to cart' :
                $translated_text = __( 'Add to basket', 'woocommerce' );
                break;
            case 'Related Products' :
                $translated_text = __( 'Check out these related products', 'woocommerce' );
                break;
        }
        return $translated_text;
    }
    add_filter( 'gettext', 'my_text_strings', 20, 3 );

Enjoy ….

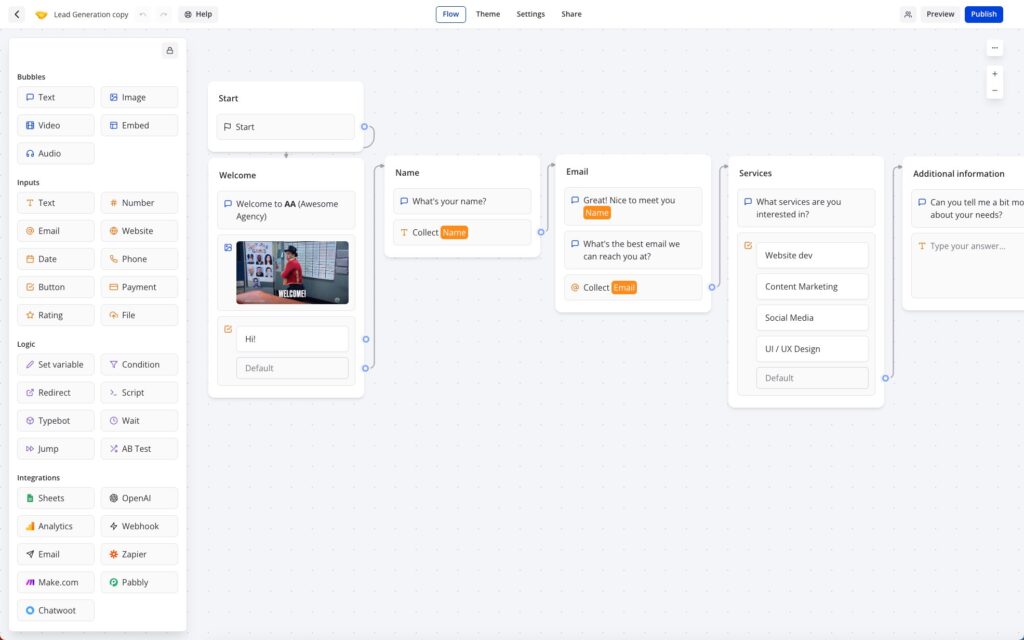

Typebot is a free and open-source platform that lets you create conversational apps and forms, which can be embedded anywhere on your website or mobile apps.

It provides various features like text, image, and video bubble messages, input fields for various data types, native integrations, conditional branching, URL redirections, beautiful animations, and customizable themes.

You can embed it as a container, popup, or chat bubble with the native JS library and get in-depth analytics of the results in real-time.

Typebot can be used in the Cloud (free tier available) or installed via Docker.

Its drag & drop builder makes it amazingly fast to build your personal chatbot. It provides a ton of integrations (Google Analytics, Google Sheets, OpenAI, Zapier, Webhooks, Email, Chatwoot) out of the box, or you can connect other things via your own API requests.

I have been a longtime user of RocketChat and have built my own chatbots using Hubot or Botpress.

RocketChat

“Rocket.Chat is a free and open-source team chat platform that enables real-time communication between team members. It provides team messaging, audio and video conferencing, screen sharing, file sharing, and other collaboration features. It offers a familiar chat interface that makes it easy for teams to stay connected and collaborate effectively.

Rocket.Chat is highly customizable and extensible, with a large number of community-contributed plugins and integrations. It supports multiple platforms, including web, desktop, and mobile, making it accessible to teams regardless of their device or location. It also offers end-to-end encryption for secure communication, and compliance with various industry regulations such as HIPAA, GDPR, and others.”

Hubot

“Hubot is a popular open-source chatbot framework developed by GitHub. It allows developers to build and deploy custom chatbots that can automate tasks, integrate with various APIs, and enhance team communication and collaboration.

Hubot is built on Node.js and can be extended with various plugins and scripts, making it highly customizable and flexible. It comes with many built-in plugins, including integration with popular chat platforms like Slack, HipChat, and Campfire, as well as various APIs like Twitter, GitHub, and Google.

Hubot enables developers to build chatbots that can perform various tasks, such as scheduling meetings, deploying code, fetching data, and responding to user queries. It also provides a command-line interface for managing and interacting with the chatbot.”

Botpress

“Botpress is an open-source platform that allows you to build and deploy chatbots and conversational interfaces. It provides a user-friendly interface and a visual flow editor that allows you to create and manage your chatbot’s conversation flow. Botpress comes with many pre-built components and integrations, including natural language processing (NLP) engines, chat widgets, and webhooks, making it easy to build complex chatbots with advanced functionality.

Botpress offers features like machine learning-based NLP, conversational analytics, multi-language support, customizable themes, and a modular architecture that allows you to add custom functionalities with ease. It supports multiple channels, including web, Facebook Messenger, Slack, and WhatsApp, allowing you to reach your audience wherever they are.”

Typebot beats Botpress and could be the reason why I might be skipping RocketChat as well. Not sure I want to build an integration for RocketChat myself.

A base YAML Docker Compose file to get you started. This will set up a Postgres instance, the Typebot builder and the Typebot viewer.

    version: '3.3'
    services:
      typebot-db:
        image: postgres:13
        restart: always
        volumes:
          - db_data:/var/lib/postgresql/data
        environment:
          - POSTGRES_DB=typebot
          - POSTGRES_PASSWORD=typebot
      typebot-builder:
        image: baptistearno/typebot-builder:latest
        restart: always
        depends_on:
          - typebot-db
        ports:
          - '8080:3000'
        extra_hosts:
          - 'host.docker.internal:host-gateway'
        # See https://docs.typebot.io/self-hosting/configuration for more configuration options
        environment:
          - DATABASE_URL=postgresql://postgres:typebot@typebot-db:5432/typebot
          - NEXTAUTH_URL=<your-builder-url>
          - NEXT_PUBLIC_VIEWER_URL=<your-viewer-url>
          - ENCRYPTION_SECRET=<your-encryption-secret>
          - ADMIN_EMAIL=<your-admin-email>
      typebot-viewer:
        image: baptistearno/typebot-viewer:latest
        restart: always
        ports:
          - '8081:3000'
        # See https://docs.typebot.io/self-hosting/configuration for more configuration options
        environment:
          - DATABASE_URL=postgresql://postgres:typebot@typebot-db:5432/typebot
          - NEXT_PUBLIC_VIEWER_URL=<your-viewer-url>
          - ENCRYPTION_SECRET=<your-encryption-secret>
    volumes:
      db_data:
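The `<your-encryption-secret>` placeholder needs a real value, identical for builder and viewer. A minimal sketch for generating one with Node.js, assuming Typebot expects a 32-character secret (that is my assumption; please verify it against the configuration docs linked in the file). `generateSecret` is a hypothetical helper name.

```javascript
const crypto = require("crypto");

// 24 random bytes encode to exactly 32 base64 characters.
function generateSecret() {
  return crypto.randomBytes(24).toString("base64");
}

// Paste the printed line into the environment section of both services.
console.log(`ENCRYPTION_SECRET=${generateSecret()}`);
```

Generate the value once and reuse it; changing it later would presumably make previously encrypted data unreadable.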

I am a huge Docker fan and run my own home and cloud server with it.

“Docker is a platform that allows developers to create, deploy, and run applications in containers. Containers are lightweight, portable, and self-sufficient environments that can run an application and all its dependencies, making it easier to manage and deploy applications across different environments. Docker provides tools and services for building, shipping, and running containers, as well as a registry for storing and sharing container images.

With Docker, developers can package their applications as containers and deploy them anywhere, whether it’s on a laptop, a server, or in the cloud. Docker has become a popular technology for DevOps teams and has revolutionized the way applications are developed and deployed.”

I am always looking for new ways to document the tools I use. This might help others to find interesting projects to enhance their own work or hobby life :)

I will have multiple series of this kind. I am starting with Docker this week, as it is at the core / a hub for many things I do. I often test-drive things locally before deploying them to the cloud.

I am not concentrating on the installation of Docker itself; there are so many articles about that out there. You will have no problem finding help articles or videos detailing it for your platform.

Docker Compose and Docker CLI (Command Line Interface) are two different tools provided by Docker, although they are often used together.

Docker CLI is a command-line interface tool that allows users to interact with Docker and manage Docker containers, images, and networks from the terminal. It provides a set of commands that can be used to create, start, stop, and manage Docker containers, as well as to build and push Docker images.

Docker Compose, on the other hand, is a tool for defining and running multi-container Docker applications. It allows users to define a set of services and their dependencies in a YAML file and then start and stop the entire application with a single command. Docker Compose also provides a way to manage the lifecycle of the containers as a group, including scaling up and down the number of containers.

I prefer the use of Docker Compose, as it makes it easy to replicate and tweak a setup between different servers.

There are tools like composerize, which allow you to easily transform a CLI command into a Compose file. It is also a nice way to combine multiple commands into one clean configuration.
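To illustrate what such a conversion involves, here is a deliberately tiny sketch. It is not how composerize works internally; `dockerRunToService` and `toComposeYaml` are hypothetical helpers that only understand `-d`, `--name`, `--restart`, `-p`, `-v` and `-e`, and they do not handle shell quoting or values containing single quotes.

```javascript
// Flags that may repeat and map to a list in the Compose file.
const LIST_FLAGS = new Map([
  ["-p", "ports"],
  ["-v", "volumes"],
  ["-e", "environment"],
]);

// Convert a simple `docker run` command into a service name plus service object.
function dockerRunToService(command) {
  const tokens = command.trim().split(/\s+/).slice(2); // drop "docker run"
  const service = {};
  let name = "app"; // hypothetical default when --name is missing

  for (let i = 0; i < tokens.length; i++) {
    const token = tokens[i];
    if (token === "-d") continue; // detached mode has no Compose equivalent here
    if (token === "--name") { name = tokens[++i]; continue; }
    if (token === "--restart") { service.restart = tokens[++i]; continue; }
    if (LIST_FLAGS.has(token)) {
      const key = LIST_FLAGS.get(token);
      if (!service[key]) service[key] = [];
      service[key].push(tokens[++i]);
      continue;
    }
    service.image = token; // first unrecognized token is treated as the image
    break;
  }
  return { name, service };
}

// Render the service as a small Compose YAML document.
function toComposeYaml({ name, service }) {
  const lines = ["services:", `  ${name}:`];
  for (const [key, value] of Object.entries(service)) {
    if (Array.isArray(value)) {
      lines.push(`    ${key}:`);
      value.forEach((item) => lines.push(`      - '${item}'`));
    } else {
      lines.push(`    ${key}: ${value}`);
    }
  }
  return lines.join("\n");
}

console.log(toComposeYaml(dockerRunToService(
  "docker run -d --name web --restart always -p 8080:80 -e TZ=UTC nginx:latest"
)));
```

For anything beyond a quick experiment, the real tool covers far more flags than this sketch does.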

Portainer is an open-source container management tool that provides a web-based user interface for managing Docker environments. With Portainer, users can easily deploy and manage containers, images, networks, and volumes using a graphical user interface (GUI) instead of using the Docker CLI. Portainer also provides features for monitoring container and system metrics, creating and managing container templates, and configuring and managing Docker Swarm clusters.

Portainer is designed to be easy to use and to provide a simple and intuitive interface for managing Docker environments. It supports multiple Docker hosts and allows users to switch between them easily from the GUI. Portainer also provides role-based access control (RBAC) to manage user access and permissions, making it suitable for use in team environments.

Portainer can be installed as a Docker container and can be used to manage both local and remote Docker environments. It is available in two versions: Portainer CE (Community Edition) and Portainer Business. Portainer CE is free and open-source, while Portainer Business provides additional features and support for enterprise users.

Portainer is my tool of choice, as it allows me to create stacks. A stack is a collection of Docker services that are deployed and managed as a single entity. A stack is defined in a Compose file (in YAML format) that specifies the services and their configurations.

When a stack is deployed, Portainer creates the required containers, networks, and volumes and starts the services in the stack. Portainer also monitors the stack and its services, providing status updates and alerts in case of issues or failures.

As I said, it's important for me to easily transfer a single container or stack to another server. The stack itself can be easily copied and reused. But how do we easily export the setup of an existing single Docker container into a docker-compose file?

docker-autocompose to the rescue! This docker image allows you to generate a docker-compose yaml definition from a docker container.

    docker pull ghcr.io/red5d/docker-autocompose:latest

Export single or multiple containers

    docker run --rm -v /var/run/docker.sock:/var/run/docker.sock ghcr.io/red5d/docker-autocompose <container-name-or-id> <additional-names-or-ids>...

Export all containers

    docker run --rm -v /var/run/docker.sock:/var/run/docker.sock ghcr.io/red5d/docker-autocompose $(docker ps -aq)

This has also been a great tool for quickly backing up all relevant container information. Apart from the persistent data, this is the most important information for quickly restoring a setup if needed.

Backup, backup … backup! I learned my lesson when it comes to restoring Docker setups ;) It's so easy to forget little tweaks you made to the setup of a Docker container.

Starting tomorrow …

BookStack is an open-source, web-based platform for organizing, storing, and sharing knowledge and documentation. It was developed to provide a user-friendly and intuitive interface for creating and managing knowledge bases, wikis, and other types of structured content.

BookStack allows users to create books, chapters, and pages, and to organize them hierarchically. Users can also add images, files, and links to their content, and collaborate with others by assigning roles and permissions. BookStack also supports full-text search, version control, and commenting, making it easy to find and update information.

BookStack is written in PHP and uses the Laravel framework, and it is available for free under the MIT License.

This is my internal homelab documentation system; I use it for private and client-related content. BookStack is fast, clean and well documented. It has really elevated the way I store and access important reminders, how-tos and documentation.

The system provides API access and has many ways to tweak it. BookStack documentation.

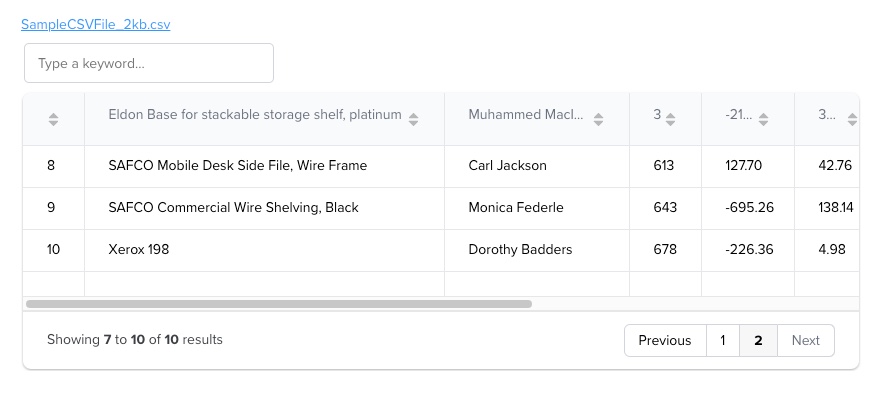

CSV stands for “Comma-Separated Values”. It is a simple file format used to store tabular data, such as spreadsheets or databases, in plain text. In a CSV file, each line represents a row of data, and each column is separated by a comma (or sometimes a semicolon or tab).

There are always CSV exports or other data stored in CSV format that I sometimes need quick access to.

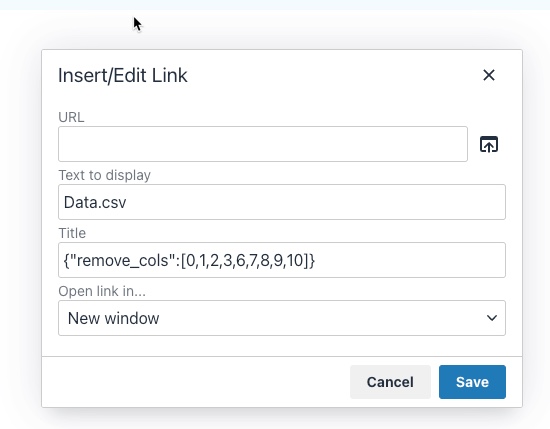

BookStack allows you to add files to pages and insert links to them, but it does not embed or parse those files in any way. There are hacks similar to mine: this example embeds PDFs, and here is the main Hacks page.

We need something to parse the CSV file and something to display the information in a nice visual & flexible grid.

Included and linked CSV files look something like this in the page source.

    <p id="bkmrk-samplecsvfile_2kb.cs">
      <a href="https://bookstack.instance/attachments/12" target="_blank" rel="noopener">SampleCSVFile_2kb.csv</a>
    </p>

The settings allow you to add custom code to your instance. Another option would be to tweak template files, but it's easier to do these light tweaks using the header customizer.

This can be found under: https://bookstack.instance/settings/customization

Either link the two libraries (PapaParse and Grid.js) externally or, better, use locally stored versions of them.

    <script type="text/javascript" src="https://cdn.jsdelivr.net/npm/papaparse@5.4.0/papaparse.min.js"></script>
    <script src="https://unpkg.com/gridjs/dist/gridjs.umd.js"></script>
    <link href="https://cdn.jsdelivr.net/npm/gridjs/dist/theme/mermaid.min.css" rel="stylesheet" />

    <script>
      function init() {
        document.querySelectorAll('.page-content a').forEach((node, i) => {
          let parent = node;
          let unique_id = i; // used to build a unique wrapper id per link
          let text = node.textContent;
          let link = node.href;

          // Only handle links whose text contains ".csv"
          if (text.includes(".csv")) {
            let header;
            let rows = [];
            // Optional JSON config from the title attribute (null when the attribute is absent)
            let json = JSON.parse(node.getAttribute("title"));

            Papa.parse(link, {
              download: true,
              header: false,
              complete: function(results) {
                results.data.forEach(function(data, index) {
                  // Drop the columns listed in remove_cols, if a config was given
                  if (json && Array.isArray(json.remove_cols)) {
                    let removeValFrom = json.remove_cols;
                    data = data.filter(function(value, index) {
                      return removeValFrom.indexOf(index) == -1;
                    });
                  }
                  // First row is the header, everything else is data
                  if (index == 0) {
                    header = data;
                  } else {
                    rows.push(data);
                  }
                });

                // Insert a wrapper right after the link and render the grid into it
                const csv_wrapper = document.createElement("div");
                csv_wrapper.setAttribute("id", "csv_wrapper_" + unique_id);
                parent.after(csv_wrapper);

                new gridjs.Grid({
                  search: true,
                  columns: header,
                  data: rows,
                  fixedHeader: true,
                  sort: true,
                  pagination: {
                    limit: 6
                  }
                }).render(document.getElementById("csv_wrapper_" + unique_id));
              }
            });
          }
        });
      }
      window.addEventListener('DOMContentLoaded', init);
    </script>

Github: Will be adding more hacks, as I clean some of them up :)

You can use the title attribute to pass some config data, in JSON format. Currently only to strip columns, but will extend that a bit more for flexible usage ;)

    {"remove_cols":[0,1,2,3,6,7,8,9,10]}
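The column stripping can also be looked at in isolation, without the DOM or the two libraries. A minimal sketch, assuming the CSV has already been parsed into an array of rows; `prepareGridData` is a hypothetical helper name, not part of the hack itself.

```javascript
// Split parsed CSV rows into header and body, dropping the column indexes
// listed in config.remove_cols (config may be null when no title is set).
function prepareGridData(parsedRows, config) {
  const removed = new Set((config && config.remove_cols) || []);
  const kept = parsedRows.map((row) =>
    row.filter((_, index) => !removed.has(index))
  );
  return { header: kept[0] || [], rows: kept.slice(1) };
}

const sample = [
  ["id", "name", "price"],
  ["1", "Coffee", "9.90"],
  ["2", "Tea", "4.50"],
];
console.log(prepareGridData(sample, JSON.parse('{"remove_cols":[0]}')));
```

Indexes are zero-based and refer to the original column positions, so the config stays valid no matter how many columns are removed.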

It should look something like this …

Enjoy coding …

“Übersicht” is a German word that means “overview” or “summary” in English. The name of the software was chosen because it allows users to have a quick overview of various information on their desktop. It’s also a play on words, because in German the name of the software can be translated as “super sight” which refers to the ability to have a lot of information at a glance.

Übersicht is a lightweight and powerful application that allows users to create and customize desktop widgets using web technologies such as HTML, CSS, and JavaScript. With Übersicht, you can create widgets that display information such as the weather, calendar events, system resource usage, and more. The widgets can be customized to display the information in any format and can be positioned anywhere on the desktop, allowing you to create a personalized and efficient workspace.

Übersicht widgets are created using a simple and user-friendly interface that allows you to preview and edit the widget’s code. The widgets are also highly customizable, allowing you to change the appearance and behavior of the widgets to suit your needs. Additionally, Übersicht widgets can be shared with other users, and there are a variety of widgets available for download from the developer’s website.

The application is open-source, free to use and lightweight, so it should not noticeably affect your computer's performance. Overall, Übersicht is a powerful and versatile tool for creating custom desktop widgets on macOS.

There are several other tools similar to Übersicht that allow you to create custom desktop widgets. Some of the most popular alternatives include:

With many external systems in play, it's always crucial to keep an eye on things. I have built nice dashboards using Grafana, InfluxDB, NetData and Prometheus. But they are either displayed in a browser window or on a separate screen.

If you have 2 / 3 monitors or an ultra-wide screen, you have a lot of desktop real estate you can use.

That is something that I have been looking at for years, but only tried for a short period of time. This year I really want to build out desktop and menu widgets that help me get an even better overview of things and reduce repetitive tasks.

I will share links to things I like and share access to the widgets I build myself or tweaked.

These are the current things that are in progress or planned.

Nothing to share yet ….

“The heidelpay Group is one of the fastest growing German tech companies for international payment transactions. Founded by Mirko Hüllemann in 2003, the company relies on its own innovative solutions such as the secured invoice purchase, purchase by instalments, direct debit and online bank transfers. In addition, heidelpay also cooperates with more than 200 well-known providers of credit cards and wallet solutions.

As a payment institute approved by the German Federal Financial Supervisory Authority BaFin and with over 16 years of experience in e-commerce and at the POS in its favour, the heidelpay Group enables companies of all sizes to make payments worldwide. The full-service payment service provider covers the entire spectrum of electronic payment processing: from processing, procurement, monitoring and risk management to accounts receivable management. Its fully scalable and modular solutions are used by 30,000 national and international customers. The various payment methods are available for e-commerce, m-commerce and stationary points of sale.”

On March 19th I took part in the Heidelpay Academy, to be able to better support my customers in the future with the integration of the innovative solutions offered by Heidelpay.

I have been a partner since last year and have already integrated direct debit via Heidelpay for a customer.

You might have heard about Structured Data, Schema.org and JSON-LD.

Search engines read structured data and use it to enhance search engine results. Structured data helps search engines to understand and categorize page content.

This structured data, in JSON-LD format, describes a simple Article.

    {
      "@context": "http://schema.org",
      "@type": "Article",
      "author": "John Doe",
      "interactionStatistic": [
        {
          "@type": "InteractionCounter",
          "interactionService": {
            "@type": "WebSite",
            "name": "Twitter",
            "url": "http://www.twitter.com"
          },
          "interactionType": "http://schema.org/ShareAction",
          "userInteractionCount": "1203"
        },
        {
          "@type": "InteractionCounter",
          "interactionType": "http://schema.org/CommentAction",
          "userInteractionCount": "78"
        }
      ],
      "name": "How to Tie a Reef Knot"
    }

Schema.org is a collaborative, community activity with a mission to create, maintain, and promote schemas for structured data on the Internet. But not all structured data endpoints are actually used by Google, Bing or other search engines yet.

Google provides a detailed overview of structured data allowed and used for search results.

There are basic enhancements you can use, like the Article structured data above. There are also many other more specific uses, like Video, LocalBusiness, Events, FAQ, Job Postings, Recipe and so on. Bing also provides a basic overview, but their documentation is scattered and feels incomplete.

If you use a modern CMS, many structured data endpoints are already integrated out of the box (Article, Website, Logo, Person …).

Modular content management systems also often offer additional functionality through plugins, which help integrate structured data directly. Some do it better than others!

But if you really want to dive deep and integrate all those little things, structured data is still far more powerful when added manually. Especially things like events, products, job listings, courses and Q&A can be greatly enhanced by hand.

Alex@portalZINE
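As an example of adding structured data by hand, here is a minimal sketch that builds an Event and wraps it in the script tag that goes into the page head. `buildEventJsonLd` and `toScriptTag` are hypothetical helper names, the event values are made up, and the property set has not been checked against the required fields of any particular search engine.

```javascript
// Build a schema.org Event as a plain object.
function buildEventJsonLd({ name, startDate, locationName, url }) {
  return {
    "@context": "https://schema.org",
    "@type": "Event",
    name,
    startDate, // ISO 8601 date or date-time
    location: { "@type": "Place", name: locationName },
    url,
  };
}

// Serialize the object into a JSON-LD script tag.
function toScriptTag(data) {
  // Escape "<" so a "</script>" inside a value cannot close the tag early.
  const json = JSON.stringify(data, null, 2).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">\n${json}\n</script>`;
}

console.log(toScriptTag(buildEventJsonLd({
  name: "Coffee Tasting Evening", // made-up sample values
  startDate: "2030-05-04T18:00",
  locationName: "Example Roastery",
  url: "https://example.com/events/coffee-tasting",
})));
```

Run the output through one of the validation tools before relying on it; they will point out properties that are missing for a given rich result type.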

Google and Bing offer validation tools for structured data. Both integrate it into their Webmaster Tools. You can also use the JSON-LD Playground to validate the JSON-LD itself or RDFa Play, Structured Data Linter, Facebook Debugger, Schema.org Generator and many other tools.

I am a huge structured data fan and have been working with it for years now. I am constantly looking for new supported structured data endpoints, to enhance my own or customer websites & data.

Google constantly updates its documentation and highlights experimental structured data endpoints, like Speakable, which marks the sections of a website that are best suited for audio playback.

Fresh structured data helps to promote your content and enhance SEO, directly enhancing your discoverability and your search engine position. Your content becomes more meaningful for search engines, making it easier for them to promote it to the right potential user. It also ties into the GO GREEN concept, as you are reducing bounces of your website for users getting offered the wrong content.

Things like recipes and how-tos are already pushed to the top of the search index. A perfect way to promote your website and get noticed.

Together with my partners in crime (Dorit & Micha), we have finally opened our own personal online store.

We have been selling our single origin coffees (1st Single Malt Whisky Coffee, Basic – Single Origin Arabica, Kill me Quick Espresso – Single Origin Robusta), teas (Kräuterschorle – Kräutertee, Feuerkieker – Schwarztee) and rum (Fortune Teller – Double Aged Barbados Rum) using the Amazon Marketplace for the past 2 years.

GreenApe has been a side project for the past years and I never wanted to deal with the maintenance of our own store. But it's time to move on and do our own thing. Amazon has removed so many useful features over the years or added new fees on top of other fees. Even though Amazon provides access to a large number of customers, for small companies the fees build up quickly.

With our own store we can finally do bundles and coupons again and offer better optimized shipping. It will also allow me to better test-drive some interesting new features for my customers ;) Yeah, it's kind of my new toy or shopping lab! It's fun being able to work on untested new SEO features, structured data, merchant tools, shopping ads and the tracking of all of those.

We have been selling in Germany for the past 2 years, but that might be changing in the future depending on how well the new store shapes up :)

If you live in Germany, love good coffee, tea or rum … say Hi!

GreenApe – Makes Your Life Better

Homepage

Shop

Contact us

Development today relies on multiple teams, services, and environments all working in unison. A topic that always comes up, when setting up a new development environment: How do we secure important credentials, while not making it too complicated for the rest of the team?

The key when working with version control systems like Git is to keep any type of credentials out of the versioning system. These can be API keys, database or email passwords.

Even if it's a private repository, development environments might change. It can be a simple staging & live website setup you are maintaining.

    DB_HOST=localhost
    DB_USER=username
    DB_PASS=password

The simplest way in PHP is to use .env files to store your credentials outside of the publicly accessible directory structure. So outside of public_html, but still within reach of the executing environment so it can read the file. Variables are accessible through $_ENV['yourVar'] or getenv("yourVar"), once included in your code.

To make it simple you can use the popular package vlucas/phpdotenv, which reads and imports the file automatically.

    <?php
    require_once __DIR__.'/../vendor/autoload.php';

    // Constructor syntax of phpdotenv v2; newer releases use Dotenv\Dotenv::createImmutable(...)
    $dotenv = new Dotenv\Dotenv(__DIR__.'/../');
    $dotenv->load();
    ?>
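To make the file format concrete, here is a minimal sketch of what such a loader does with the file content before exposing the values. It is not the phpdotenv implementation; `parseEnv` is a hypothetical helper that only handles plain KEY=VALUE lines, comments and one pair of surrounding quotes.

```javascript
// Parse KEY=VALUE lines; blank lines and lines starting with "#" are skipped.
function parseEnv(text) {
  const vars = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes
    const first = value[0];
    if (value.length >= 2 && (first === '"' || first === "'") && value[value.length - 1] === first) {
      value = value.slice(1, -1);
    }
    vars[key] = value;
  }
  return vars;
}

console.log(parseEnv("DB_HOST=localhost\nDB_USER=username\nDB_PASS=password"));
```

Only the first "=" splits key and value, so passwords that contain "=" survive intact.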

Don’t fool yourself: if an attacker finds a way into your system, these variables can be easily read. This is just hiding the file from public access and provides some convenience while developing or sharing code.

Some people propose to encrypt / decrypt environment variables using a secret key. But if an attacker can access your data, they can also find the secret key.
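For reference, this is roughly what encrypting a single value with a secret key looks like. A minimal sketch using Node's built-in crypto module with AES-256-GCM; `encryptValue`, `decryptValue` and the dot-separated storage format are my own assumptions for illustration, not the format of any specific package.

```javascript
const crypto = require("crypto");

// Encrypt a value with AES-256-GCM; `key` must be a 32-byte Buffer.
// Returns "iv.tag.ciphertext", each part base64-encoded.
function encryptValue(plaintext, key) {
  const iv = crypto.randomBytes(12); // fresh nonce for every value
  const cipher = crypto.createCipheriv("aes-256-gcm", key, iv);
  const data = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  return [iv, tag, data].map((part) => part.toString("base64")).join(".");
}

// Decrypt a value produced by encryptValue; throws if the key or data is wrong.
function decryptValue(encoded, key) {
  const [iv, tag, data] = encoded
    .split(".")
    .map((part) => Buffer.from(part, "base64"));
  const decipher = crypto.createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(data), decipher.final()]).toString("utf8");
}

const key = crypto.randomBytes(32); // the key has to be stored somewhere else
const stored = encryptValue("password", key);
console.log(stored, "->", decryptValue(stored, key));
```

The sketch also shows the weak spot mentioned above: the key has to live somewhere the application can read it, and so can an attacker with the same access.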

There are some nice packages that offer just that. You have to decide if those fit your needs.

psecio/secure_dotenv: The library provides an easy way to handle the encryption and decryption of the information in your .env file.

johnathanmiller/secure-env-php: Env encryption and decryption library. Prevent committing and exposing vulnerable plain-text environment variables in production environments. The lib provides a nice guided interface to encrypt your .env file.

beyondcode/laravel-credential: Add encrypted credentials to your Laravel production environment. You can edit and encrypt using php artisan credentials:edit.

The Apache2 environment variables are set in the /etc/apache2/envvars file. These variables are not the same as the environment variables of your Linux system; they are stored and manipulated in an internal Apache structure.

The /etc/apache2/envvars file holds variable definitions such as APACHE_LOG_DIR (the location of Apache log files), APACHE_PID_FILE (the location of the file holding the Apache process ID), APACHE_RUN_USER (the user that runs Apache, by default www-data), etc.

You can open and modify this file in a text editor of your choice. This is nice, but far from simple and requires a server restart. This is something which helps you when hardening security on a live deployed setup.

There are dynamic approaches, but you can do some research for that yourself :) Skipped that rabbit hole for now …

Handling secrets completely detached is another possibility. This is surely overkill for most cases, but using an Infrastructure Secret Management concept might be worth looking into if you are working on bigger-scale projects that involve multiple development teams and setups. These services also often deal with secret rotation.

HashiCorp Vault – “Vault is a tool for securely accessing secrets. A secret is anything that you want to tightly control access to, such as API keys, passwords, certificates, and more. Vault provides a unified interface to any secret, while providing tight access control and recording a detailed audit log.”

You can deploy your own vault on your own infrastructure or test out a hosted version, which is free for Open Source projects. HashiCorp Vault

You will find a bunch of HashiCorp-related packages that will help you to integrate a vault into your project workflow (scmrus/php-vault-env, poc-webapp-vault).

While this is nice, you will need to cache / store credentials somewhere, as you don’t want to query the vault on every single access.
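Such a cache can be as small as the following sketch. It is not tied to any vault client: `fetchSecret` is a placeholder for whatever call actually talks to the vault, and `createSecretCache` and the injectable `now` clock are hypothetical names chosen for the example.

```javascript
// Wrap a secret-fetching function with a time-limited in-memory cache.
// Whatever fetchSecret returns is stored as-is; if it returns a promise,
// the promise itself is cached (including a rejected one, until it expires).
function createSecretCache(fetchSecret, ttlMs, now = Date.now) {
  const cache = new Map(); // name -> { value, expiresAt }
  return function getSecret(name) {
    const hit = cache.get(name);
    if (hit && hit.expiresAt > now()) return hit.value; // still fresh
    const value = fetchSecret(name);
    cache.set(name, { value, expiresAt: now() + ttlMs });
    return value;
  };
}

let vaultCalls = 0;
const getSecret = createSecretCache((name) => {
  vaultCalls += 1; // stand-in for a real request to the vault
  return `value-of-${name}`;
}, 60000);
getSecret("DB_PASS");
getSecret("DB_PASS");
console.log(vaultCalls); // the second lookup did not hit the vault
```

Keep the lifetime shorter than your rotation interval, otherwise the application keeps using a secret that has already been replaced.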

HashiCorp Vault is not the only Infrastructure Secret Management solution. There is a nice GitHub Gist that lists other solutions and a nice feature matrix.

Amazon also provides a solution called AWS Secrets Manager, which makes a lot of sense, when you build and deploy on AWS already :)