Create a system cron for WordPress that is accessible and can be easily tweaked to provide more details. Here is some basic information about crons and the tools I am going to use …

In WordPress, the term „cron“ refers to the system used for scheduling tasks to be executed at predefined intervals. The WordPress cron system allows various actions to be scheduled, such as publishing scheduled posts, checking for updates, sending email notifications, and running other scheduled tasks.

WordPress includes its own pseudo-cron system, which relies on visitors accessing your site. When a visitor loads a page on your WordPress site, WordPress checks if there are any scheduled tasks that need to be executed. If there are, it runs those tasks. This system works well for most sites, but it has limitations, particularly for low-traffic sites or sites that need precise scheduling.

To overcome these limitations, WordPress also provides the option to use a real cron system. With a real cron system, tasks are scheduled and executed independently of visitor traffic. This can be more reliable and precise than relying on visitors to trigger cron tasks.

To set up a real cron system for WordPress, you typically need to configure your server’s cron job scheduler to trigger the wp-cron.php file at regular intervals. This file handles the execution of scheduled WordPress tasks.

WP Crontrol is a solid UI for listing cron events and seeing what's happening in the background.

WP-CLI (WordPress Command Line Interface) is a powerful command-line tool that allows developers and administrators to interact with WordPress websites directly through the command line, without needing to use a web browser.

It provides a wide range of commands for managing various aspects of a WordPress site, such as executing crons / scheduled tasks, installing plugins, updating themes, managing users, and much more.

Most WordPress hosts have it preinstalled. If yours does not, see the WP-CLI installation instructions.

Test CRON

```
# Test WP Cron spawning system
$ wp cron test
Success: WP-Cron spawning is working as expected.
```

Run CRON

```
# Schedule a new cron event
$ wp cron event schedule cron_test
Success: Scheduled event with hook 'cron_test' for 2024-01-31 10:19:16 GMT.

# Run all cron events due right now
$ wp cron event run --due-now
Success: Executed a total of 2 cron events.

# Delete all scheduled cron events for the given hook
$ wp cron event delete cron_test
Success: Deleted 2 instances of the cron event 'cron_test'.

# List scheduled cron events in JSON
$ wp cron event list --fields=hook,next_run --format=json
[{"hook":"wp_version_check","next_run":"2024-01-31 10:15:13"},{"hook":"wp_update_plugins","next_run":"2016-05-31 10:15:13"},{"hook":"wp_update_themes","next_run":"2016-05-31 10:15:14"}]
```

ntfy (pronounced notify) is a simple HTTP-based pub-sub notification service. It allows you to send notifications to your phone or desktop via scripts from any computer, and/or using a REST API.

You can host your own docker instance or use the hosted solution.

The documentation is detailed and offers many ways to tweak the resulting notification.

```php
/** Disable virtual cron in wp-config.php */
define('DISABLE_WP_CRON', true);
```

```
0 13 * * * wget -q -O - https://domain.com/wp-cron.php?doing_wp_cron >/dev/null 2>&1
```

I am not showing you how to create a system cron, as that can vary depending on your hosting provider. Some of you will just set up / modify the crontab yourselves. So here is an example of how I use it these days …

```
*/30 * * * * OUTPUT=$(/bin/bash -c "/path/to/wp-cli/wp --path=/path/to/wp/install/ cron event run --url=https://your-website.com/if/multisite/ --due-now" 2>&1) && curl -u %ntfy_token% -H "Filename:your_cron_output.txt" -H "Title:Your Cron" -d "$OUTPUT" "https://ntfy.server.com/topic"
```

Let's dissect this :)

See example usage on crontab.guru.

```
*/30 * * * *
```

I'm using a variable to capture the output, allowing me to pass it to ntfy.

```
OUTPUT=$()
```

Use bash to launch wp-cli, passing in required parameters to make sure the right website is targeted.

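I am using --due-now to only launch those schedules that are actually pending. >/dev/null 2>&1 prevents any emails from being sent for this cron job by discarding both output streams. It is always helpful to remove it for the first test drive. Note that the combined command above only uses 2>&1 inside $(), so that errors end up in OUTPUT as well; adding >/dev/null there would leave nothing to send to ntfy.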

>/dev/null: redirects standard output (stdout) to /dev/null

2>&1: redirects standard error (2) to standard output (1), which then discards it as well since standard output has already been redirected :)
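The difference between these redirections is easy to try out in a terminal. Here is a minimal sketch; the emit function is just a hypothetical stand-in for the WP-CLI call, writing one line to each stream:

```shell
# Hypothetical stand-in for the real command: one line to stdout, one to stderr.
emit() {
  echo "to stdout"
  echo "to stderr" >&2
}

# Capture both streams, which is what the OUTPUT=$(... 2>&1) part relies on.
captured_both=$(emit 2>&1)

# Discard both streams; nothing is left to capture.
captured_none=$(emit >/dev/null 2>&1)

# Order matters: stderr is pointed at the captured stdout first,
# then stdout alone is sent to /dev/null, so only the error line survives.
captured_err=$(emit 2>&1 >/dev/null)

printf 'both: %s\nnone: %s\nerr:  %s\n' "$captured_both" "$captured_none" "$captured_err"
```

The last variant can be handy if you only want to be notified about errors.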

```
/bin/bash -c "/path/to/wp-cli/wp --path=/path/to/wp/install/ cron event run --url=https://your-website.com/if/multisite/ --due-now" >/dev/null 2>&1
```

This part sends the output to a ntfy instance / topic.

```
curl -u %ntfy_token% \
  -H "Filename:your_cron_output.txt" \
  -H "Title:Your Cron" \
  -d "$OUTPUT" \
  "https://ntfy.server.com/topic"
```

You can set many other things as well, like tags, images …. Check the documentation about publishing for more options. You can even redirect to an email account ;)
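If the one-liner gets too long for your crontab, the same idea can live in a small script. This is only a sketch under a few assumptions: the function names and the WP_BIN, WP_PATH, WP_URL, NTFY_URL and NTFY_AUTH variables are made up for illustration, and the values are the placeholders from above. Unlike the && in the one-liner, it also notifies when WP-CLI fails and raises the ntfy priority in that case.

```shell
# Placeholders, adjust to your setup (all names here are hypothetical).
WP_BIN="${WP_BIN:-/path/to/wp-cli/wp}"
WP_PATH="${WP_PATH:-/path/to/wp/install/}"
WP_URL="${WP_URL:-https://your-website.com/if/multisite/}"
NTFY_URL="${NTFY_URL:-https://ntfy.server.com/topic}"
NTFY_AUTH="${NTFY_AUTH:-user:password}"

# Map the WP-CLI exit status to an ntfy priority.
ntfy_priority() {
  if [ "$1" -eq 0 ]; then echo "default"; else echo "high"; fi
}

# Map the WP-CLI exit status to a notification title.
ntfy_title() {
  if [ "$1" -eq 0 ]; then echo "Your Cron"; else echo "Your Cron FAILED (exit $1)"; fi
}

# Run all due events, capture stdout and stderr, then publish the result.
run_and_notify() {
  output=$("$WP_BIN" --path="$WP_PATH" --url="$WP_URL" cron event run --due-now 2>&1)
  status=$?
  curl -s -u "$NTFY_AUTH" \
    -H "Title: $(ntfy_title "$status")" \
    -H "Priority: $(ntfy_priority "$status")" \
    -d "$output" \
    "$NTFY_URL"
}
```

Call run_and_notify at the end of the script and point the crontab entry at the script file. A side benefit is that crontab treats an unescaped % as a line break, so keeping credentials and headers in a script avoids that kind of surprise.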

```php
if ( defined( 'WP_CLI' ) && WP_CLI ) {
    // Do WP-CLI specific things.
}
```

```php
if ( defined( 'DOING_CRON' ) )
{
    // Do something
}
```

Enjoy coding …

Alex

I had the chance this year to meet up with my client Thomas Dowson from „Archaeology Travel Media“ at the Travel Innovation Summit in Seville.

Over the past 2 years we have been revamping all the content from archaeology-travel.com and have integrated a sophisticated travel itinerary builder system into the mix. We are almost feature complete and are currently fine-tuning the system. New explorers are welcome to sign up and test-drive our set of unique features.

It was so nice to finally meet the whole team in person and celebrate what we have accomplished together so far.

Directly taken from the front-page :)

„EXPLORE THE WORLD’S PASTS WITH ARCHAEOLOGY TRAVEL GUIDES, CRAFTED BY EXPERIENCED ARCHAEOLOGISTS & HISTORIANS

Whatever your preferred style of travel, budget or luxury, backpacker or hand luggage only, slow or adventure, if you are interested in archaeology, history and art this is an online travel guide just for you.

Here you will find ideas for where to go, what sites, monuments, museums and art galleries to see, as well as information and tips on how to get there and what tickets to buy.

Our destination and thematic guides are designed to assist you to find and/or create adventures in archaeology and history that suit you, be it a bucket list trip or visiting a hidden gem nearby.“

More Details

About

Mission & Vision

Code of Ethics

We are constantly expanding our set of curated destinations, locations and POIs. Our plan is to make it even easier to find unique places for your next travel experience.

We are also working on partnerships to enhance travel options and offer an even broader variety of additional content.

Looking forward to all the things to come, as well as to the continued exceptional collaboration between all team members.

First a bit of context :)

Translation within WordPress is based on Gettext. Gettext is a software internationalization and localization (i18n) framework used in many programming languages to facilitate the translation of software applications into different languages. It provides a set of tools and libraries for managing multilingual strings and translating them into various languages.

The primary goal of Gettext is to separate the text displayed in an application from the code that generates it. It allows developers to mark strings in their code that need to be translated and provides mechanisms for extracting those strings into a separate file known as a „message catalog“ or „translation template.“

The translation template file contains the original strings and serves as a basis for translators to provide translations for different languages. Translators use specialized tools, such as poedit or Lokalize, to work with the message catalog files. These tools help them associate translated strings with their corresponding original strings, making the translation process more manageable.

At runtime, Gettext libraries are used to retrieve the appropriate translated strings based on the user’s language preferences and the available translations in the message catalog. It allows applications to display the user interface, messages, and other text elements in the language preferred by the user.

Gettext is widely used in various programming languages, including C, C++, Python, Ruby, PHP, and many others. It has become a de facto standard for internationalization and localization in the software development community due to its flexibility and extensive support in different programming environments.

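WordPress uses dedicated language files to store translations of your strings of text in other languages. Two formats are used: .po files (Portable Object, the human-readable translation source) and .mo files (Machine Object, the compiled binary that WordPress loads at runtime).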

There has been a discussion for years about whether it makes sense to cache .mo files to speed up WordPress when multiple languages are in use. See the discussion of the WordPress Core team.

There have been a couple of plugins trying to fix this and prevent reloading of language files on every pageload.

Most of these use transients in your database and object caches if active.

Well, it all depends on the number of language files and your infrastructure. This load/parse operation is quite CPU-intensive and takes a significant amount of time.

I decided not to bother with it in the past :) But I am always looking for ways to speed up multilingual websites, as that is my daily bread & butter ;) Check out Index WP MySQL For Speed & Index WP Users For Speed, which optimize the MySQL indexes for speed. That change really does make a big difference!

One thing that can help to speed things up is to use the native PHP gettext extension instead of the WordPress implementation of it. This speeds up translation processing and helps big multilingual websites.

Native Gettext for WordPress by Colin Leroy-Mira, provides just that.

Create a must use plugin and add this:

```php
<?php
/** Plugin Name: Disable Gettext */
add_filter('override_load_textdomain','__return_true');
```

This will prevent any .mo file from loading.

Please consider the performance impact. Read about it at the main documentation.

```php
<?php
/**
 * Change text strings
 *
 * @link http://codex.wordpress.org/Plugin_API/Filter_Reference/gettext
 */
function my_text_strings( $translated_text, $text, $domain ) {
    switch ( $translated_text ) {
        case 'Sale!' :
            $translated_text = __( 'Clearance!', 'woocommerce' );
            break;
        case 'Add to cart' :
            $translated_text = __( 'Add to basket', 'woocommerce' );
            break;
        case 'Related Products' :
            $translated_text = __( 'Check out these related products', 'woocommerce' );
            break;
    }
    return $translated_text;
}
add_filter( 'gettext', 'my_text_strings', 20, 3 );
```

Enjoy ….

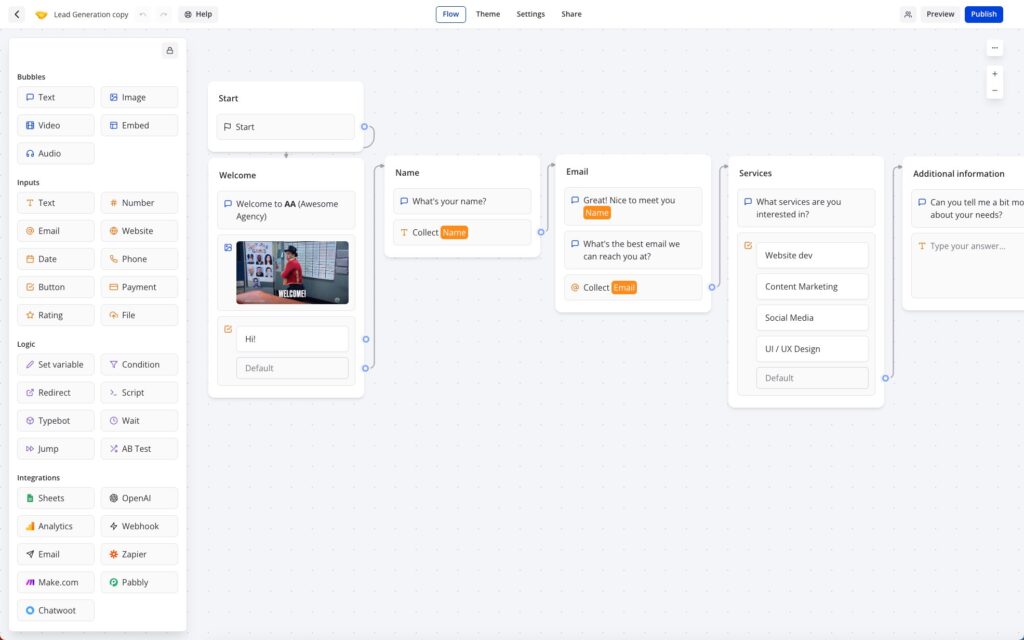

Typebot is a free and open-source platform that lets you create conversational apps and forms, which can be embedded anywhere on your website or mobile apps.

It provides various features like text, image, and video bubble messages, input fields for various data types, native integrations, conditional branching, URL redirections, beautiful animations, and customizable themes.

You can embed it as a container, popup, or chat bubble with the native JS library and get in-depth analytics of the results in real-time.

Typebot can be used in the Cloud (free tier available) or installed via Docker.

Its drag & drop builder makes it amazingly fast to build your personal chatbot. It provides a ton of integrations (Google Analytics, Google Sheets, OpenAI, Zapier, Webhooks, Email, Chatwoot) out of the box, or you can connect other things via your own API requests.

I have been a longtime user of RocketChat and have built my own chatbots using Hubot or Botpress.

RocketChat

„Rocket.Chat is a free and open-source team chat platform that enables real-time communication between team members. It provides team messaging, audio and video conferencing, screen sharing, file sharing, and other collaboration features. It offers a familiar chat interface that makes it easy for teams to stay connected and collaborate effectively.

Rocket.Chat is highly customizable and extensible, with a large number of community-contributed plugins and integrations. It supports multiple platforms, including web, desktop, and mobile, making it accessible to teams regardless of their device or location. It also offers end-to-end encryption for secure communication, and compliance with various industry regulations such as HIPAA, GDPR, and others.“

Hubot

„Hubot is a popular open-source chatbot framework developed by GitHub. It allows developers to build and deploy custom chatbots that can automate tasks, integrate with various APIs, and enhance team communication and collaboration.

Hubot is built on Node.js and can be extended with various plugins and scripts, making it highly customizable and flexible. It comes with many built-in plugins, including integration with popular chat platforms like Slack, HipChat, and Campfire, as well as various APIs like Twitter, GitHub, and Google.

Hubot enables developers to build chatbots that can perform various tasks, such as scheduling meetings, deploying code, fetching data, and responding to user queries. It also provides a command-line interface for managing and interacting with the chatbot.“

Botpress

„Botpress is an open-source platform that allows you to build and deploy chatbots and conversational interfaces. It provides a user-friendly interface and a visual flow editor that allows you to create and manage your chatbot’s conversation flow. Botpress comes with many pre-built components and integrations, including natural language processing (NLP) engines, chat widgets, and webhooks, making it easy to build complex chatbots with advanced functionality.

Botpress offers features like machine learning-based NLP, conversational analytics, multi-language support, customizable themes, and a modular architecture that allows you to add custom functionalities with ease. It supports multiple channels, including web, Facebook Messenger, Slack, and WhatsApp, allowing you to reach your audience wherever they are.“

Typebot beats Botpress and could be the reason why I might be skipping RocketChat as well. Not sure I want to build an integration for RocketChat myself.

Here is a base Docker Compose YAML file to get you started. This will set up a Postgres instance, the Typebot builder and the Typebot viewer.

```yaml
version: '3.3'
services:
  typebot-db:
    image: postgres:13
    restart: always
    volumes:
      - db_data:/var/lib/postgresql/data
    environment:
      - POSTGRES_DB=typebot
      - POSTGRES_PASSWORD=typebot
  typebot-builder:
    image: baptistearno/typebot-builder:latest
    restart: always
    depends_on:
      - typebot-db
    ports:
      - '8080:3000'
    extra_hosts:
      - 'host.docker.internal:host-gateway'
    # See https://docs.typebot.io/self-hosting/configuration for more configuration options
    environment:
      - DATABASE_URL=postgresql://postgres:typebot@typebot-db:5432/typebot
      - NEXTAUTH_URL=<your-builder-url>
      - NEXT_PUBLIC_VIEWER_URL=<your-viewer-url>
      - ENCRYPTION_SECRET=<your-encryption-secret>
      - ADMIN_EMAIL=<your-admin-email>
  typebot-viewer:
    image: baptistearno/typebot-viewer:latest
    restart: always
    ports:
      - '8081:3000'
    # See https://docs.typebot.io/self-hosting/configuration for more configuration options
    environment:
      - DATABASE_URL=postgresql://postgres:typebot@typebot-db:5432/typebot
      - NEXT_PUBLIC_VIEWER_URL=<your-viewer-url>
      - ENCRYPTION_SECRET=<your-encryption-secret>
volumes:
  db_data:
```
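The ENCRYPTION_SECRET placeholder needs a real value, and the builder and viewer have to share the same one. As far as I remember, Typebot expects a 32-character secret; treat that length as my assumption and double-check the configuration page linked in the compose file. A small sketch to generate one (the function name is made up):

```shell
# Hypothetical helper: 24 random bytes encode to exactly 32 base64 characters.
generate_secret() {
  head -c 24 /dev/urandom | base64 | tr -d '\n'
}

# Print a line you can copy into both environment sections.
secret=$(generate_secret)
echo "ENCRYPTION_SECRET=$secret"
```

Generate it once and reuse the same value for both services, otherwise the viewer should not be able to decrypt what the builder stored.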

I am a huge Docker fan and run my own home and cloud server with it.

„Docker is a platform that allows developers to create, deploy, and run applications in containers. Containers are lightweight, portable, and self-sufficient environments that can run an application and all its dependencies, making it easier to manage and deploy applications across different environments. Docker provides tools and services for building, shipping, and running containers, as well as a registry for storing and sharing container images.

With Docker, developers can package their applications as containers and deploy them anywhere, whether it’s on a laptop, a server, or in the cloud. Docker has become a popular technology for DevOps teams and has revolutionized the way applications are developed and deployed.“

I am always looking for new ways to document the tools I use. This might help others to find interesting projects to enhance their own work or hobby life :)

I will have multiple series of this kind. I am starting with Docker this week, as it is at the core / a hub for many things I do. I often testdrive things locally, before deploying them to the cloud.

I am not concentrating on the installation of Docker itself, there are so many articles about that out there. You will have no problem to find help articles or videos detailing it for your platform.

Docker Compose and Docker CLI (Command Line Interface) are two different tools provided by Docker, although they are often used together.

Docker CLI is a command-line interface tool that allows users to interact with Docker and manage Docker containers, images, and networks from the terminal. It provides a set of commands that can be used to create, start, stop, and manage Docker containers, as well as to build and push Docker images.

Docker Compose, on the other hand, is a tool for defining and running multi-container Docker applications. It allows users to define a set of services and their dependencies in a YAML file and then start and stop the entire application with a single command. Docker Compose also provides a way to manage the lifecycle of the containers as a group, including scaling up and down the number of containers.

I prefer the use of Docker Compose, as it makes it easy to replicate and tweak a setup between different servers.

There are tools like composerize, which allow you to easily transform a Docker CLI command into a Compose file. It is also a nice way to combine multiple commands into a clean configuration.

Portainer is an open-source container management tool that provides a web-based user interface for managing Docker environments. With Portainer, users can easily deploy and manage containers, images, networks, and volumes using a graphical user interface (GUI) instead of using the Docker CLI. Portainer also provides features for monitoring container and system metrics, creating and managing container templates, and configuring and managing Docker Swarm clusters.

Portainer is designed to be easy to use and to provide a simple and intuitive interface for managing Docker environments. It supports multiple Docker hosts and allows users to switch between them easily from the GUI. Portainer also provides role-based access control (RBAC) to manage user access and permissions, making it suitable for use in team environments.

Portainer can be installed as a Docker container and can be used to manage both local and remote Docker environments. It is available in two versions: Portainer CE (Community Edition) and Portainer Business. Portainer CE is free and open-source, while Portainer Business provides additional features and support for enterprise users.

Portainer is my tool of choice, as it allows to create stacks. A stack is a collection of Docker services that are deployed and managed as a single entity. A stack is defined in a Compose file (in YAML format) that specifies the services and their configurations.

When a stack is deployed, Portainer creates the required containers, networks, and volumes and starts the services in the stack. Portainer also monitors the stack and its services, providing status updates and alerts in case of issues or failures.

As I said, it's important for me to easily transfer a single container or stack to another server. The stack itself can be easily copied and reused. But how do we easily export the setup of a running single container into a docker-compose file?

docker-autocompose to the rescue! This docker image allows you to generate a docker-compose yaml definition from a docker container.

```shell
docker pull ghcr.io/red5d/docker-autocompose:latest
```

Export single or multiple containers

```shell
docker run --rm -v /var/run/docker.sock:/var/run/docker.sock ghcr.io/red5d/docker-autocompose <container-name-or-id> <additional-names-or-ids>...
```

Export all containers

```shell
docker run --rm -v /var/run/docker.sock:/var/run/docker.sock ghcr.io/red5d/docker-autocompose $(docker ps -aq)
```

This has also been a great tool to quickly back up all relevant container information. Apart from the persistent data, this is the most important information for quickly restoring a setup if needed.
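For backups I find one file per container easier to restore than one big export. A rough sketch of how that could look; the BACKUP_DIR variable, the function names and the file naming scheme are my own assumptions, only the docker-autocompose call itself is taken from above:

```shell
# Hypothetical target folder for the exported compose files.
BACKUP_DIR="${BACKUP_DIR:-./compose-backups}"

# Build the target file name from a container name and a date stamp.
backup_file_for() {
  printf '%s/%s-%s.yml\n' "$BACKUP_DIR" "$1" "$2"
}

# Export every container (running or stopped) into its own dated file.
backup_all_containers() {
  stamp=$(date +%Y-%m-%d)
  mkdir -p "$BACKUP_DIR"
  docker ps -a --format '{{.Names}}' | while IFS= read -r name; do
    docker run --rm -v /var/run/docker.sock:/var/run/docker.sock \
      ghcr.io/red5d/docker-autocompose "$name" \
      > "$(backup_file_for "$name" "$stamp")" </dev/null
  done
}
```

Running backup_all_containers from a daily cron job gives you a small history of your container setups next to the backups of the persistent data.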

Backup, backup … backup! I learned my lesson when it comes to restoring Docker setups ;) It's so easy to forget little tweaks you made to the setup of a Docker container.

Starting tomorrow …

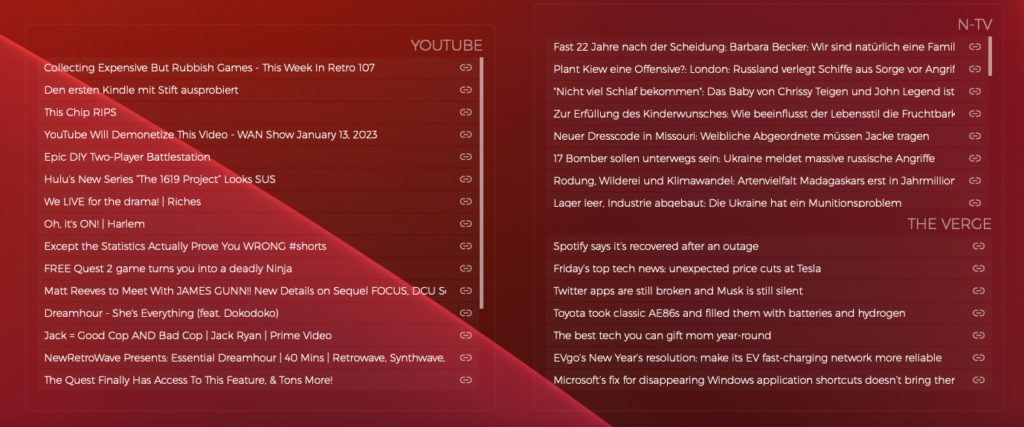

„Übersicht“ is a German word that means „overview“ or „summary“ in English. The name of the software was chosen because it allows users to have a quick overview of various information on their desktop. It’s also a play on words, because in German the name of the software can be translated as „super sight“ which refers to the ability to have a lot of information at a glance.

Übersicht is a lightweight and powerful application that allows users to create and customize desktop widgets using web technologies such as HTML, CSS, and JavaScript. With Übersicht, you can create widgets that display information such as the weather, calendar events, system resource usage, and more. The widgets can be customized to display the information in any format and can be positioned anywhere on the desktop, allowing you to create a personalized and efficient workspace.

Übersicht widgets are created using a simple and user-friendly interface that allows you to preview and edit the widget’s code. The widgets are also highly customizable, allowing you to change the appearance and behavior of the widgets to suit your needs. Additionally, Übersicht widgets can be shared with other users, and there are a variety of widgets available for download from the developer’s website.

The application is open-source and free to use, and it is lightweight enough that it should not affect your computer's performance. Overall, Übersicht is a powerful and versatile tool for creating custom desktop widgets on macOS.

There are several other tools similar to Übersicht that allow you to create custom desktop widgets. Some of the most popular alternatives include:

With many external systems in play, it's always crucial to keep an eye on things. I have built nice dashboards using Grafana, InfluxDB, NetData and Prometheus. But they are either displayed in a browser window or on a separate screen.

If you have 2 / 3 monitors or an ultra-wide screen, you have a lot of Desktop real-estate you can use.

That is something that I have been looking at for years, but only tried for a short period of time. This year I want to really build out desktop and menu widgets that help me get an even better overview of things and reduce repetitive tasks.

I will share links to things I like and share access to the widgets I build myself or tweaked.

These are the current things that are in progress or planned.

Nothing to share yet ….

„Git is a DevOps tool used for source code management. It is a free and open-source version control system used to handle small to very large projects efficiently. Git is used to tracking changes in the source code, enabling multiple developers to work together on non-linear development.“

Many use Github to host remote Git repositories, but you can use other hosts like: BitBucket, Gitlab, Google Cloud Source Repositories …. You can also host your own repository on your local NAS or remote server.

I will use this article to collect common problems I encountered and possible solutions.

A push failed both in GitHub Desktop and when pushing from the command line. The best bet is to experiment with different git config options.

Setting core.compression to 4 worked for me, and the push went through nicely.

```shell
git config --global core.compression 0
git config --global core.compression 1
git config --global core.compression 2
git config --global core.compression 3
git config --global core.compression 4
```
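Instead of changing the value by hand after every failed attempt, the experiment can be put into a small loop. This is just a sketch and the function name is made up; it changes your global git config, exactly like the commands above, and stops at the first level where the push goes through:

```shell
# Hypothetical helper: try core.compression 0 to 4 until a push succeeds.
# All arguments are passed on to git push (e.g. remote and branch).
try_push_with_compression() {
  for level in 0 1 2 3 4; do
    git config --global core.compression "$level"
    if git push "$@"; then
      echo "push succeeded with core.compression=$level"
      return 0
    fi
  done
  echo "push failed for all tested compression levels" >&2
  return 1
}
```

Usage would be something like try_push_with_compression origin main. The last tested level stays in your global config, so reset it afterwards if you do not want to keep it.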

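Other config options that can be tweaked are listed below. Each expects a size in bytes as its value; as far as I can tell, only http.postBuffer is documented in git-config.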

```shell
git config --global http.postBuffer
git config --global https.postBuffer
git config --global ssh.postBuffer
```

Read more about them here

This is not a tutorial, but more like sharing a nice geeky road-trip ;)

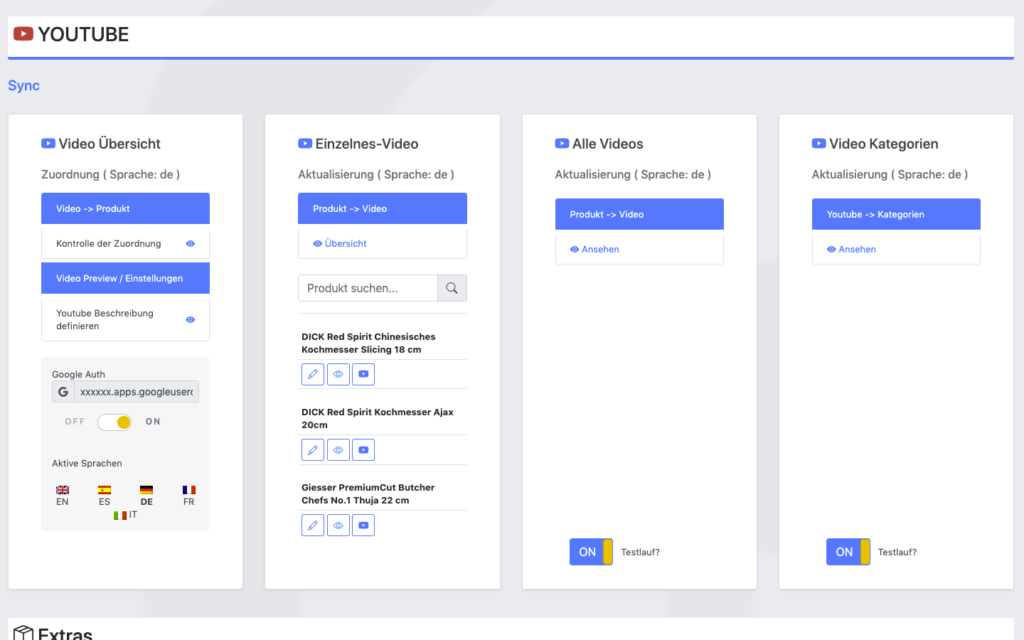

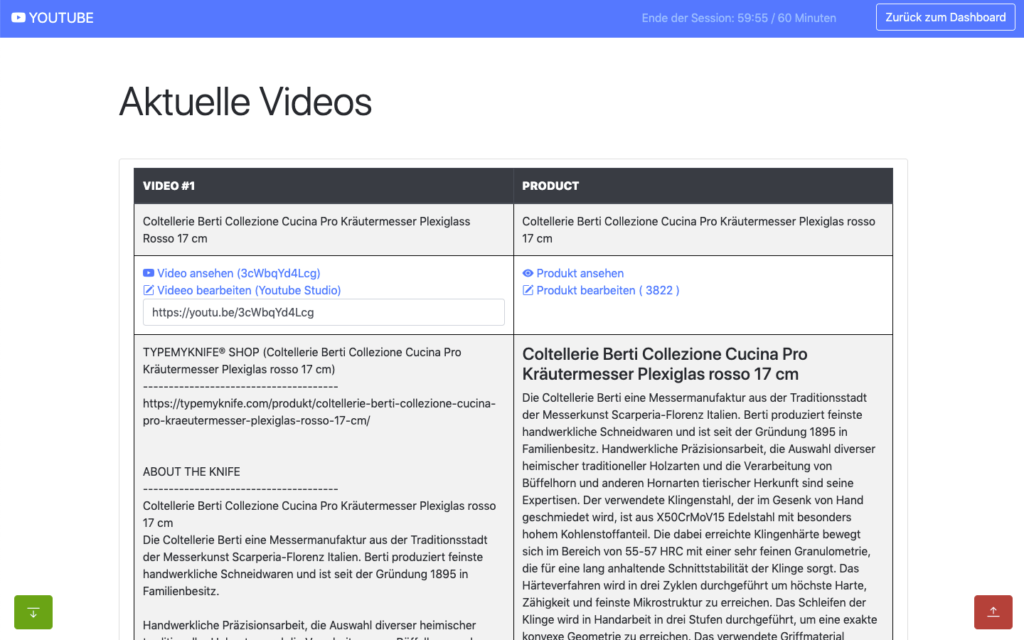

I have a pretty good understanding of the Youtube Data API, as I have actively used it on portalZINE TV in the past, to upload videos and dynamically link them to my local post-types.

For one of my latest customer projects (TYPEMYKNIFE / typemyknife.com), the task was a bit more complicated and the goal was to make it as future-proof as it can be with the Google APIs :)

Prerequisites / References to get you started:

The goal for the setup was to actively synchronize WooCommerce products with linked / attached videos, with their source at Youtube.

As the website is multilingual, WPML integration is critical as well. And as Youtube allows localization of title and description, that can be added into the mix quite easily in the future ;)

The following product attributes should be mirrored and optimised for Youtube:

The following attributes should be integrated into the description to enrich the Youtube description:

All of these attributes will be collected internally and assigned using a simple template system, which allows the customer to move parts around freely and freely layout the description for Youtube.

The following stats will be collected for review:

Youtube SEO

These are the relevant key aspects, that help to get your videos more views.

In the past, access to the Youtube Data API was far easier and less limited when it comes to offline / non-expiring OAuth2 refresh tokens.

When you are building a server-side application that is only available to your customer or moderators, it makes no sense to run that app through the Google App verification. Your app will never be used in public.

The Youtube Data API scopes are classified as sensitive and therefore require a third-party security assessment for public access.

The scopes I am requesting are https://www.googleapis.com/auth/youtube.upload + https://www.googleapis.com/auth/youtube.

Because of that, it's far easier to just set up OAuth 2 in test mode and restrict access to your customer and specific additional accounts only (up to 100 test users allowed). What all these accounts need is access to your own or the brand Youtube channel.

Preparation in the Google Cloud Console:

A detailed description can be found here.

You can circumvent verification for the consent screen by using an organisation setup at Google. Here is some info about that. With that setup, offline refresh tokens should work fine.

Update: Just tried that, but it won't work with a branded Youtube account, even though the cloud user has admin access to it. Not giving up yet, but Google / Youtube really makes it difficult to just have a simple offline solution for specific tasks ;) BTW, I also forced the login hint to make sure the right account is logged in: $client->setLoginHint('YourWorkspaceAccount');

You might have heard of „The League of Extraordinary Packages“. It is a group of developers who have banded together to build solid, well tested PHP packages using modern coding standards.

They also offer an OAuth2-client + OAuth2 Google extension that can be used.

On the server, the Google API PHP SDK can be easily integrated using Composer.

In my customer plugin I neatly separated all relevant areas in classes & traits:

You can check the expiry time of your access token by accessing:

https://www.googleapis.com/oauth2/v1/tokeninfo?access_token=YOUR_TOKEN

„A Google Cloud Platform project with an OAuth consent screen configured for an external user type and a publishing status of "Testing" is issued a refresh token expiring in 7 days.“ – Google
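To check the remaining lifetime from the command line, the response of that endpoint can be piped through a small filter. A sketch, assuming the JSON response contains an expires_in field with the remaining seconds; the function name and the ACCESS_TOKEN variable are made up for illustration:

```shell
# Hypothetical helper: read tokeninfo JSON on stdin and print the
# value of its expires_in field (remaining lifetime in seconds).
token_expires_in() {
  sed -n 's/.*"expires_in"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage, with ACCESS_TOKEN holding your current access token:
# curl -s "https://www.googleapis.com/oauth2/v1/tokeninfo?access_token=$ACCESS_TOKEN" | token_expires_in
```

If jq is available on your server, it is the more robust choice for parsing the JSON; sed just keeps the sketch free of dependencies.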

Basic Auth example from the SDK:

```php
<?php

// Call set_include_path() as needed to point to your client library.
set_include_path($_SERVER['DOCUMENT_ROOT'] . '/directory/to/google/api/');
require_once 'Google/Client.php';
require_once 'Google/Service/YouTube.php';
session_start();

/*
 * You can acquire an OAuth 2.0 client ID and client secret from the
 * Google Cloud Console <https://cloud.google.com/console>
 * For more information about using OAuth 2.0 to access Google APIs, please see:
 * <https://developers.google.com/youtube/v3/guides/authentication>
 * Please ensure that you have enabled the YouTube Data API for your project.
 */
$OAUTH2_CLIENT_ID = 'XXXXXXX.apps.googleusercontent.com';
$OAUTH2_CLIENT_SECRET = 'XXXXXXXXXX';
$REDIRECT = 'http://localhost/oauth2callback.php';
$APPNAME = "XXXXXXXXX";

$client = new Google_Client();
$client->setClientId($OAUTH2_CLIENT_ID);
$client->setClientSecret($OAUTH2_CLIENT_SECRET);
$client->setScopes('https://www.googleapis.com/auth/youtube');
$client->setRedirectUri($REDIRECT);
$client->setApplicationName($APPNAME);
$client->setAccessType('offline');

// Define an object that will be used to make all API requests.
$youtube = new Google_Service_YouTube($client);

if (isset($_GET['code'])) {
  if (strval($_SESSION['state']) !== strval($_GET['state'])) {
    die('The session state did not match.');
  }

  $client->authenticate($_GET['code']);
  $_SESSION['token'] = $client->getAccessToken();
}

if (isset($_SESSION['token'])) {
  $client->setAccessToken($_SESSION['token']);
  echo '<code>' . $_SESSION['token'] . '</code>';
}

// Check to ensure that the access token was successfully acquired.
if ($client->getAccessToken()) {
  try {
    // Call the channels.list method to retrieve information about the
    // currently authenticated user's channel.
    $channelsResponse = $youtube->channels->listChannels('contentDetails', array(
      'mine' => 'true',
    ));

    $htmlBody = '';
    foreach ($channelsResponse['items'] as $channel) {
      // Extract the unique playlist ID that identifies the list of videos
      // uploaded to the channel, and then call the playlistItems.list method
      // to retrieve that list.
      $uploadsListId = $channel['contentDetails']['relatedPlaylists']['uploads'];

      $playlistItemsResponse = $youtube->playlistItems->listPlaylistItems('snippet', array(
        'playlistId' => $uploadsListId,
        'maxResults' => 50
      ));

      $htmlBody .= "<h3>Videos in list $uploadsListId</h3><ul>";
      foreach ($playlistItemsResponse['items'] as $playlistItem) {
        $htmlBody .= sprintf('<li>%s (%s)</li>', $playlistItem['snippet']['title'],
          $playlistItem['snippet']['resourceId']['videoId']);
      }
      $htmlBody .= '</ul>';
    }
  } catch (Google_Service_Exception $e) {
    $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
      htmlspecialchars($e->getMessage()));
  } catch (Google_Exception $e) {
    $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
      htmlspecialchars($e->getMessage()));
  }

  $_SESSION['token'] = $client->getAccessToken();
} else {
  $state = mt_rand();
  $client->setState($state);
  $_SESSION['state'] = $state;

  $authUrl = $client->createAuthUrl();
  $htmlBody = <<<END
  <h3>Authorization Required</h3>
  <p>You need to <a href="$authUrl">authorise access</a> before proceeding.</p>
END;
}
?>

<!doctype html>
<html>
  <head>
    <title>My Uploads</title>
  </head>
  <body>
    <?php echo $htmlBody?>
  </body>
</html>
```

A simple upload example can be found here.

```php
// This fragment assumes $youtube and $htmlBody have been set up as in the example above.
try {
    // REPLACE this value with the video ID of the video being updated.
    $videoId = "VIDEO_ID";

    // Call the API's videos.list method to retrieve the video resource.
    $listResponse = $youtube->videos->listVideos("snippet", array('id' => $videoId));

    // If $listResponse is empty, the specified video was not found.
    if (empty($listResponse)) {
        $htmlBody .= sprintf('<h3>Can\'t find a video with video id: %s</h3>', $videoId);
    } else {
        // Since the request specified a video ID, the response only
        // contains one video resource.
        $video = $listResponse[0];
        $videoSnippet = $video['snippet'];
        $tags = $videoSnippet['tags'];

        // Preserve any tags already associated with the video. If the video does
        // not have any tags, create a new list. Replace the values "tag1" and
        // "tag2" with the new tags you want to associate with the video.
        if (is_null($tags)) {
            $tags = array("tag1", "tag2");
        } else {
            array_push($tags, "tag1", "tag2");
        }

        // Set the tags array for the video snippet
        $videoSnippet['tags'] = $tags;

        // Update the video resource by calling the videos.update() method.
        $updateResponse = $youtube->videos->update("snippet", $video);

        $responseTags = $updateResponse['snippet']['tags'];

        $htmlBody .= "<h3>Video Updated</h3><ul>";
        $htmlBody .= sprintf('<li>Tags "%s" and "%s" added for video %s (%s) </li>',
            array_pop($responseTags), array_pop($responseTags),
            $videoId, $video['snippet']['title']);
        $htmlBody .= '</ul>';
    }
} catch (Google_Service_Exception $e) {
    $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
} catch (Google_Exception $e) {
    $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
}
```

All operations against the YouTube Data API are rate limited. What matters for us is the quota of queries per day.

The default quota is 10,000 units per day. That sounds like a lot, but it is easily gone after updating 150-200 videos. You can request a higher limit, but that again means a lot of paperwork and questions that are just not needed.

The above limit just means that you need to cache as many queries as possible and only query live when needed ;)
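To make the caching idea a bit more concrete, here is a minimal sketch of a cache wrapper around an expensive API call. It is not the code from my plugin: `cached_query()` is a hypothetical helper, the file-based storage in the temp directory is an assumption to keep the example self-contained, and inside WordPress you would more likely reach for the Transients API (`get_transient()` / `set_transient()`).

```php
<?php

/**
 * Hypothetical helper: return a cached result if it is fresh enough,
 * otherwise run the live query once and store its result.
 *
 * @param string      $key        Identifies the query, e.g. "video:VIDEO_ID".
 * @param int         $ttlSeconds How long a cached result stays valid.
 * @param callable    $fetch      Runs the live query and returns JSON-serialisable data.
 * @param string|null $cacheDir   Where to store cache files (assumption: temp dir by default).
 */
function cached_query(string $key, int $ttlSeconds, callable $fetch, ?string $cacheDir = null)
{
    $cacheDir = $cacheDir ?? sys_get_temp_dir();
    $file = $cacheDir . '/ytcache_' . md5($key) . '.json';

    // Serve from the cache while the file is younger than the TTL.
    if (is_file($file) && (time() - filemtime($file)) < $ttlSeconds) {
        $cached = json_decode(file_get_contents($file), true);
        if ($cached !== null) {
            return $cached;
        }
    }

    // Cache miss (or unreadable file): query live and remember the result.
    $fresh = $fetch();
    file_put_contents($file, json_encode($fresh), LOCK_EX);

    return $fresh;
}

// Usage idea (commented out, as it needs the $youtube client from the examples above):
// $snippet = cached_query("video:$videoId", 3600, function () use ($youtube, $videoId) {
//     $response = $youtube->videos->listVideos('snippet', array('id' => $videoId));
//     return $response[0]['snippet']['title'];
// });
```

The point of the wrapper is that the calling code does not need to know whether a result came from the cache or from a live query, so quota is only spent on a miss.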

Something you learn fast when experimenting with different things! I hit that limit multiple times in the first few days, with around 500 videos in the queue.

Different operations cost a different number of units.
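As a rough worked example of why the quota disappears so quickly, the sketch below adds up unit costs for a batch of calls. The cost table reflects the values I know from Google's quota documentation (1 unit for the list calls, 50 for `videos.update`, 100 for `search.list`), but treat it as an assumption and check the current documentation. `quota_cost()` and `max_video_updates()` are hypothetical helper names.

```php
<?php

// Unit cost per API call. Assumption: values as documented by Google
// at the time of writing; verify them before relying on the numbers.
const QUOTA_COSTS = [
    'channels.list'      => 1,
    'playlistItems.list' => 1,
    'videos.list'        => 1,
    'videos.update'      => 50,
    'search.list'        => 100,
];

// Sum the units for a set of calls, given as [method => number of calls].
function quota_cost(array $calls): int
{
    $total = 0;
    foreach ($calls as $method => $count) {
        if (!array_key_exists($method, QUOTA_COSTS)) {
            throw new InvalidArgumentException("Unknown method: $method");
        }
        $total += QUOTA_COSTS[$method] * $count;
    }
    return $total;
}

// How many videos fit into one day if each update needs
// one videos.list call plus one videos.update call.
function max_video_updates(int $dailyQuota = 10000): int
{
    $perVideo = QUOTA_COSTS['videos.list'] + QUOTA_COSTS['videos.update'];
    return intdiv($dailyQuota, $perVideo);
}

echo quota_cost(['videos.list' => 150, 'videos.update' => 150]), PHP_EOL; // 7650
echo max_video_updates(), PHP_EOL; // 196
```

With 51 units per updated video, the default quota covers fewer than 200 updates per day, and every extra lookup or test run eats into that.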

It also helps to use the Google OAuth 2.0 Playground to test-drive the YouTube Data API with your own credentials while optimising your own code.

You can define your own OAuth 2.0 configuration by clicking the cog in the upper right corner.

I set up the bulk update so that it can be split over multiple days, if required. This needs an offline refresh token, as the standard access token expires after 60 minutes.
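Splitting a queue over several days can be sketched like this. It is an illustration under assumptions, not the plugin's actual scheduler: `plan_daily_batches()` is a hypothetical helper, 51 units per video assumes one `videos.list` plus one `videos.update` call, and the reserve of 500 units for other queries is an arbitrary example value.

```php
<?php

/**
 * Hypothetical helper: split a queue of video IDs into per-day batches
 * that stay within the daily quota.
 *
 * @param array $videoIds      Queue of video IDs to update.
 * @param int   $dailyQuota    Units available per day.
 * @param int   $unitsPerVideo Units spent per updated video (assumption: 1 + 50).
 * @param int   $reserve       Units kept free for other queries (assumption).
 */
function plan_daily_batches(
    array $videoIds,
    int $dailyQuota = 10000,
    int $unitsPerVideo = 51,
    int $reserve = 500
): array {
    $perDay = intdiv(max(0, $dailyQuota - $reserve), $unitsPerVideo);
    if ($perDay < 1) {
        throw new InvalidArgumentException('Quota too small for a single update.');
    }

    // One chunk per day; the last chunk holds the remainder.
    return array_chunk($videoIds, $perDay);
}

// Example: 500 queued videos end up in three daily batches.
$ids = array_map(fn ($i) => "video_$i", range(1, 500));
$batches = plan_daily_batches($ids);

foreach ($batches as $day => $batch) {
    // Before each day's batch runs, the stored refresh token would be
    // exchanged for a fresh access token, as the old one has expired by then.
    printf("Day %d: %d videos\n", $day + 1, count($batch));
}
```

Each batch then runs in its own scheduled task on a different day, which is exactly where the offline refresh token comes in.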

My customer can also update just a single video when changes are applied to a product or a new product has been added.

If more frequent updates are required, I will ask for a raise of the queries per day. You can circumvent the limit by using multiple Google Cloud Platform accounts with new OAuth credentials, but that is really overkill right now. I have done that in the past ;)

The GUI is just based on Bootstrap, to keep it simple and clean. I am using my own wrapper to make it work within the WordPress admin.

For all AJAX operations, I am using htmx and _hyperscript, which I will talk about in another article in the future.

A really neat and clean way to build single-page interfaces.

The whole plugin runs off its own REST API endpoint. I just love using WordPress as a headless system.

I used Twig / Timber for the templates, to separate logic and layout. Timber has been my go-to solution for years now. It drives my own and many customer websites.

This has been a lot of fun, maybe a bit too much LOL

I do geek-out about many of my projects, but this experience helped me to bring my WordPress toolbox to the next level. This will help to drive other things in the future.

Working so deeply with the YouTube Data API has been fun and feels so easy now that all remaining problems have been solved.

Would have loved this during my portalZINE TV days ;)

If you read all this, you just earned yourself a badge for completion ;)

Need something similar or something else? Just say hi and we can talk.

„WPML (WordPress Multilingual) makes it easy to build multilingual sites and run them. It’s powerful enough for corporate sites, yet simple for blogs.“ – WPML

I have been running and setting up multilingual websites for more than 12 years. WordPress and related integrations have thankfully come a long way to make our lives a lot easier.

For basic content WPML is almost plug & play, but I do see more and more sites / customers struggling with more complex setups. WPML is one of the most popular multilingual plugins and is used on hundreds of thousands of websites.

Just so you know, WPML is a commercial solution!

The number of settings has increased a lot over the years, and they offer possible solutions for almost any content / plugin setup.

But for more complex setups, I would suggest hiring a professional to look over the settings, or studying the plugin documentation carefully.

Especially with a lot of content, wrong settings can quickly multiply problems and force you to revisit specific content over and over again.

WPML lets you translate any text that comes from themes / theme frameworks (Divi, Elementor, Gutenberg …), plugins, menus, slugs, SEO and additionally supported integrations (Gravity Forms, ACF, WooCommerce …).

You can translate content internally for yourself, using translation management to translate with an internal team of translators or get help from external translators / translation services.

The latest version also offers AI translations, which give you a decent start for most of your content.

In addition to the above, WPML String Translation allows you to translate texts that are not in posts, pages and taxonomies. This includes the site’s tagline, general texts in admin screens, widget titles and many other areas.

Well, I am a bit biased. I have not looked much at other solutions for the past 5 years, as it offers all I really need.

I have used it on projects from 2 to 15 languages and it scales nicely. At least with proper hosting attached!

Anything can be tweaked through the API, hooks and custom integrations. I have built additional WPML tools for my customers, to streamline some of the repetitive / boring tasks.

Their support is responsive and the forum already provides a huge amount of answers to most of the questions that might come up.

If you develop / maintain multiple customer websites with multilingual content, the investment is quickly amortized. I do offer WPML to my maintenance package customers, maybe something to consider ;)

It's an essential solution in my WP toolbox.

WPML 4.5 is on its way and will include a „Translate Everything“ feature, among other fixes and enhancements.

Translate Everything allows you to translate all of your site’s content automatically as you create it. You can then review the translations on the front-end before publishing.

VR has not just arrived, but it finally arrived for me and the masses ;)

I have been watching VR evolve from the sidelines for the past few years. It's been a fun ride, from the first prototypes to what we have now.

The biggest problems for me in the past were image quality and the huge upfront investment. With the latest generation, all of this has completely changed.

I am constantly keeping up with new technologies and have been diving into WebVR for some time now.

It's so easy to export your own simple Unity VR project into WebVR and integrate it into your own web projects.

Unreal Engine also provides options to work on VR projects. Or build upon the WebXR API.

Microsoft also has a foot in the door with MRTK (makes it easy to integrate teleporting / Releases).

With the announcement of the Oculus Quest 2 last year, I finally decided to dive in myself. Standalone VR allows me to concentrate on WebVR and experiment, while still having the option to expand into the linked PC-universe in the future as well.

I have no plans to invest in a beefy gaming rig yet, but have been trying out cloud solutions using Shadow and Paperspace Gaming / Paperspace $10 Coupon (KS4Q2TA).

Update: Latency is the biggest problem with these solutions and it can be hit or miss! My Paperspace experience was close to perfect for pure desktop use, but sadly not for VR :) I cannot test-drive Shadow right now, as the waiting list is already showing September 2021 LOL CRAZY! Local latency can be greatly reduced by using Wi-Fi 6 or Wi-Fi Direct.

With the current GPU prices, building a machine makes hardly any sense. I would love to build a small form-factor PC with a Shuttle XPC for example ;) Maybe later this year ….

I invested in VR-Comfort for now ;) I transformed the Oculus Quest 2 into a FrankenQuest with the DAS-Mod (HTC VR VIVE Deluxe Audio Strap). Loving the new look, audio and perfect weight balance :) Perfect for longer sessions.

I am officially infected. I decided to upgrade an old computer to minimum VR specs, to at least try out PC-VR :)

The final build is ghetto and really a tight fit, but it works perfectly :)

For 2021 and the current shortages, this is a big win! The whole upgrade was about 400 EUR, with 250 EUR for the new GPU.

Here are my current PC-VR specs:

| Area | Before | After / Comment |
|---|---|---|
| PC | Fujitsu P900 – i3 | Nice clean case. Mainboard D2990 (ultra small μATX). Really tiny! This would normally not be my dream mainboard, but I am using what I have :) Trying to keep costs low. |
| Power Supply | Stock | EVGA 600 W1. Better cooling and the power needed for the GPU. |
| CPU | i3-2120 | i7-2600K. Big change in overall performance. |
| CPU Cooler & Fan | Stock | Be Quiet Pure Rock Slim BK030. (Had to do some mods to install it) |
| RAM | 8GB | Enough for now. |
| GPU | NVIDIA GT 1030 – Low profile | NVIDIA GTX 1060 6GB Inno3D. That card takes up all slots, so I had to add a riser card to play with some USB 3.0 cards :) |
| USB3 | USB2 | Inateck PCIe USB 3.0 – KTU3FR-4P, again connected via a riser card! Make sure that the card gets proper power (green light on the card itself); that is why another Inateck card failed to connect or had random disconnects ;) This card charges and connects perfectly with the Oculus Quest 2. I was close to giving up and am glad I found a working solution ;) |
| USB LINK | – | Using a 3 m long cable from KIWI Design. It works without any problems and fits securely on the Oculus Quest 2. |
| Bluetooth | – | Bluetooth 4.0 – Asus BT-400 |

Everything required has been added, and now the PC VR universe is open for exploration :) As hardware is getting more expensive every week, I upgraded a second machine with comparable specs and now have 2 machines that can run VR with entry-level / decent specs :)

There are many new platforms providing access to new tools and often an easy access to a broader community. Some of them with nice build tools in VR.

„OpenXR is an open, royalty-free standard for access to virtual reality and augmented reality platforms and devices. It is developed by a working group managed by the Khronos Group consortium.“

You will find a bunch of efforts under way to build the next open multi-platform VR solution.

Building with Unity is always an option, but not the best solution for those that are just getting started or for those that just want a simple starting point to experiment ;)

Another evolving area is the office space. Some of the platforms above already dive into that area, like XRDesktop. But Oculus / Facebook itself is working on its Infinite Office integration.

Other solutions help to mirror your PC within VR and open new ways for collaboration:

The biggest problem is the mirroring of the keyboard. As soon as that is solved, this might become usable. Immerse VR provides an option to overlay a virtual representation of your keyboard, by mapping your real-life keyboard in VR.

It is always important to stay informed. Here are some communities I frequently visit:

VR is here to stay. I would have never thought it would take off in 2020 / 2021. But we all face new challenges and technology is evolving to make space for new possibilities.

While gaming / fitness / social are the entry point for VR currently, this whole market will expand quickly in 2021.

Really looking forward to new possibilities and another facet of my developer life.

Looking forward to meeting some of you in the VR-Multiverse :)