I had the chance this year to meet up with my client Thomas Dowson from “Archaeology Travel Media” at the Travel Innovation Summit in Seville.

Over the past 2 years we have been revamping all the content from archaeology-travel.com and integrating a sophisticated travel itinerary builder into the mix. We are almost feature-complete and are currently fine-tuning the system. New explorers are welcome to sign up and test-drive our set of unique features.

It was so nice to finally meet the whole team in person and celebrate what we have accomplished together so far.

Directly taken from the front-page :)

„EXPLORE THE WORLD’S PASTS WITH ARCHAEOLOGY TRAVEL GUIDES, CRAFTED BY EXPERIENCED ARCHAEOLOGISTS & HISTORIANS

Whatever your preferred style of travel, budget or luxury, backpacker or hand luggage only, slow or adventure, if you are interested in archaeology, history and art this is an online travel guide just for you.

Here you will find ideas for where to go, what sites, monuments, museums and art galleries to see, as well as information and tips on how to get there and what tickets to buy.

Our destination and thematic guides are designed to assist you to find and/or create adventures in archaeology and history that suit you, be it a bucket list trip or visiting a hidden gem nearby.“

More Details

About

Mission & Vision

Code of Ethics

We are constantly expanding our set of curated destinations, locations and POIs. Our plan is to make it even easier to find unique places for your next travel experience.

We are also working on partnerships to enhance travel options and offer an even broader variety of additional content.

Looking forward to all the things to come, as well as to the continued exceptional collaboration between all team members.

In SEO (Search Engine Optimization), there is a concept called “E-A-T”, which stands for “Expertise, Authoritativeness, and Trustworthiness”.

Google uses E-A-T to evaluate the quality of content on the web. Websites that consistently produce high-quality content that meets the E-A-T criteria are more likely to rank well in search engine results pages (SERPs).

E-A-T is not a specific algorithm or ranking factor that Google uses, but rather a framework or set of guidelines that Google’s quality raters use to evaluate the quality of content on the web. These quality raters assess the content using E-A-T as a benchmark, and their feedback helps Google improve its search algorithms.

It is especially important for content that falls under the category of YMYL (Your Money or Your Life), which includes content related to health, finance, legal, and other topics that can impact people’s well-being or financial stability. Google holds this type of content to a higher standard because inaccurate or misleading information can have serious consequences.

Building E-A-T for your website or content involves a multi-faceted approach that includes creating high-quality, informative, and engaging content, establishing your expertise and authority in your field, and building trust with your audience through transparency and honesty.

Some specific actions you can take to improve your website’s E-A-T include showcasing the expertise of your content creators, publishing authoritative and accurate content, providing clear and transparent information about your business, and building a positive online reputation through reviews, testimonials, and other forms of social proof.

It’s important to note that E-A-T is only one of many signals that influence search rankings. Others include content relevance, website speed and performance, user experience, and backlinks.

Now there is another E, for Experience (E-E-A-T). In 2023, Google wants to see that a content creator has first-hand, real-world experience with the topic discussed. This means that content and its creators are becoming far more entwined, further pushing the trend towards authoritative, quality content for audiences.

So it is back to solid author bios, detailed author pages and all relevant links that detail an author’s expertise. Thank you! Search is really shifting and things are changing rapidly. Real content is queen or king again :)

Enjoy coding …

A while back a potential customer asked me whether it is possible to restructure a WordPress Multisite setup with WPML to use a simpler, custom URL structure.

web.site/de/

web.site/en/

Languages would normally be added like this:

web.site/nl-nl/de/

web.site/nl-nl/en/

The customer wanted it to be restructured / simplified like this:

web.site/de-nl/

web.site/en-nl/

This basically mimics the structure of a single WPML website with custom languages, but with all the benefits of a multisite.

This is not something that WPML or WordPress Multisite provides out of the box.

I built a prototype setup to make it work.

It is not something that I would propose to just anyone, as it requires a lot of tweaks for anything that handles dynamic links (plugins, hooks, core systems, page builders …)

It’s doable :)

One thing that needs to be tweaked globally is the mapping of the new URL structure.

So web.site/nl-nl/en/ needs to become web.site/en-nl/

This needs to be handled on the server side, by proxying the original to the new structure.

This can be easily done using Apache or NGINX.

With that web.site/nl-nl/en/ will be proxied to web.site/en-nl/, but any core navigation will not work yet.

This is the fastest solution that I came up with, within the hour I gave myself ;)

There surely are other options, like the core rewrites / restructuring of the core shorturl handling. But these approaches might break things in far more areas.

Using the proxy approach keeps the core as it is. The solution needs to be as simple as possible, so that it can be maintained in the future :)

Just for the basic setup a couple of hooks are required to make this work; more might be needed depending on the plugins in use.

Here are a couple of examples …

WordPress site_url

```php
add_filter( 'site_url', 'custom_site_url' );

function custom_site_url( $url ) {
    // You probably don't want this on the admin side.
    if ( is_admin() ) {
        return $url;
    }

    return str_replace( '/nl-nl/en/', '/en-nl/', $url );
}
```

WordPress Nav Links

```php
// All of these filters pass the generated URL as the first argument.
add_filter( 'post_link', 'changePermalinks', 10, 3 );
add_filter( 'page_link', 'changePermalinks', 10, 3 );
add_filter( 'post_type_link', 'changePermalinks', 10, 3 );
add_filter( 'category_link', 'changePermalinks', 11, 3 );
add_filter( 'tag_link', 'changePermalinks', 10, 3 );
add_filter( 'author_link', 'changePermalinks', 11, 3 );
add_filter( 'day_link', 'changePermalinks', 11, 3 );
add_filter( 'month_link', 'changePermalinks', 11, 3 );
add_filter( 'year_link', 'changePermalinks', 11, 3 );

function changePermalinks( $url, $post = null, $leavename = false ) {
    // Only the URL is needed; the other arguments are ignored.
    return str_replace( '/nl-nl/en/', '/en-nl/', $url );
}
```

WPML

```php
add_filter( 'icl_ls_languages', 'wpml_url_fix' );

function wpml_url_fix( $languages ) {
    // Rewrite the URL of every entry in the language switcher.
    foreach ( $languages as $lang => $element ) {
        $languages[ $lang ]['url'] = str_replace( '/nl-nl/en/', '/en-nl/', $element['url'] );
    }

    return $languages;
}
```

Rankmath

```php
add_filter( 'rank_math/frontend/canonical', function( $canonical ) {
    return str_replace( '/nl-nl/en/', '/en-nl/', $canonical );
} );
```
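All hooks above repeat the same hard-coded replacement for one language. A minimal sketch of how the mapping could be kept in one place, assuming a simple lookup table; the helper name `map_language_path()` and the `$pathMap` array are hypothetical and not part of WordPress or WPML:

```php
<?php

/**
 * Hypothetical helper: rewrite multisite / WPML paths using a lookup table.
 * Neither the function nor the map is part of WordPress or WPML.
 */
function map_language_path(string $url, array $map): string
{
    // strtr() works on the original string in a single pass,
    // so an already rewritten path is never rewritten a second time.
    return strtr($url, $map);
}

// Original structure => simplified structure (taken from the examples above).
$pathMap = [
    '/nl-nl/en/' => '/en-nl/',
    '/nl-nl/de/' => '/de-nl/',
];

echo map_language_path('https://web.site/nl-nl/en/contact/', $pathMap), PHP_EOL;
echo map_language_path('https://web.site/nl-nl/de/kontakt/', $pathMap), PHP_EOL;
```

Each filter callback could then call this one helper instead of its own `str_replace()`, so a new language only needs a new entry in the map.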

This will not cover every angle, but will give you a starting point! I love my puzzles and there always is a viable solution :)

Need something similar … get in touch!

When you look at YouTube, Twitter, certain Facebook groups and even some software companies, they are all building up fear of the upcoming / in-progress Google “Helpful Content” algorithm update.

– Our “helpful content update” launching next week will better surface original, helpful content made by people, for people, rather than content made primarily to gain search traffic. It’s part of a broad effort to show more unique, authentic info in results – Google SearchLiaison@Twitter

It’s happening as I write this and it’s about time!

The goal of this update is to rank websites that publish original and unique content. Content written by real writers and not AIs.

This also downgrades websites that write about content that is not relevant to their core expertise. So no more content domain dominance by posting about every possible content angle to lure visitors in.

Also, old content that is not updated regularly will lose prominence.

This all is a plus for the end user and knowledge seeker. Google has been preparing for this for years now and it’s not happening suddenly.

Structured data has gained more and more importance over the past few years. Google is finally using it to clean up search!

Hard to tell. But change was needed! There are so many underrated websites out there that deliver quality content, but never got a chance to bubble up or shine :) This will hopefully get us better search results and better quality control.

LOOKING FORWARD TO THE RESULTS!

Keep on breathing …. ALEX

This is not a tutorial, but more like sharing a nice geeky road-trip ;)

I have a pretty good understanding of the YouTube Data API, as I actively used it on portalZINE TV in the past to upload videos and dynamically link them to my local post types.

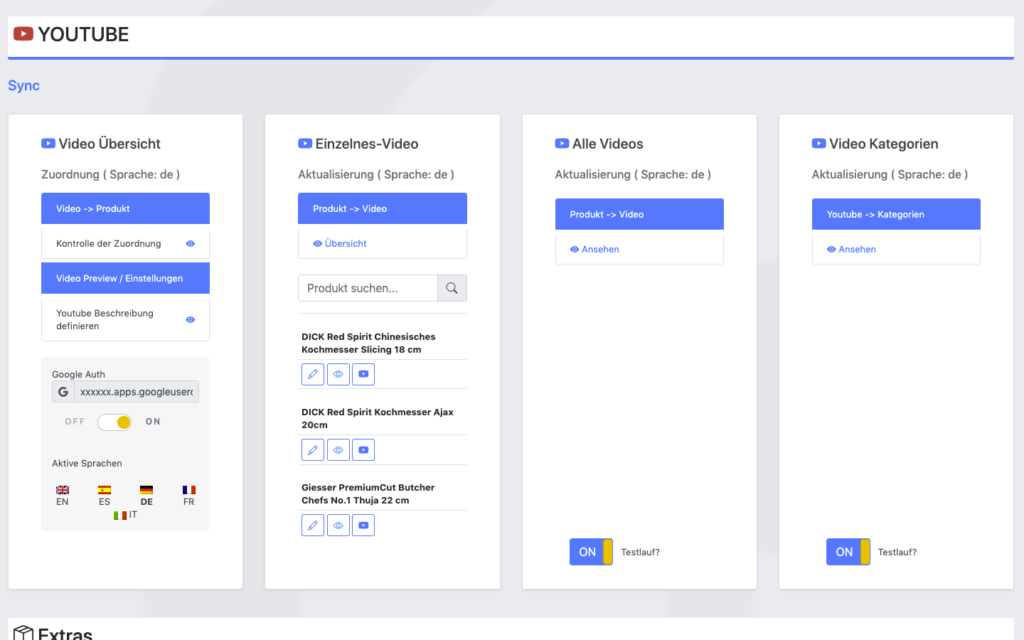

For one of my latest customer projects (TYPEMYKNIFE / typemyknife.com), the task was a bit more complicated and the goal was to make it as future-proof as it can be with the Google APIs :)

Prerequisites / References to get you started:

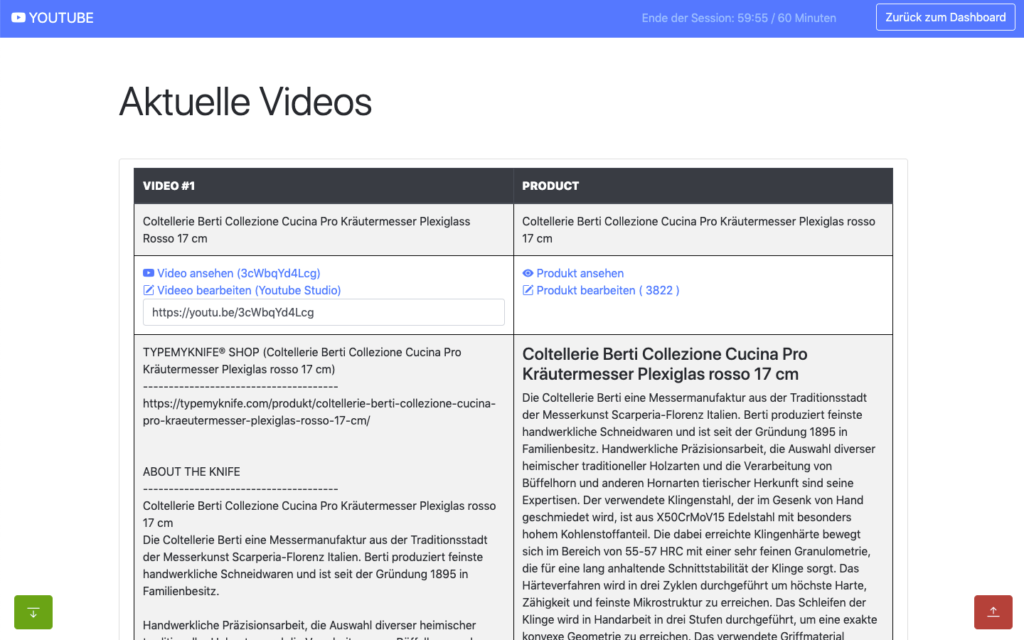

The goal for the setup was to actively synchronize WooCommerce products with linked / attached videos, with their source at YouTube.

As the website is multilingual, WPML integration is critical as well. And as YouTube allows localization of title and description, that can be added into the mix quite easily in the future ;)

The following product attributes should be mirrored and optimised for Youtube:

The following attributes should be integrated into the description to enrich the Youtube description:

All of these attributes will be collected internally and assigned using a simple template system, which allows the customer to move parts around and lay out the description for YouTube freely.
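A template system like this can be very small. The following is only a sketch, assuming `{placeholder}` tokens; the function name `render_description()` and the placeholder names are hypothetical and not taken from the actual plugin:

```php
<?php

/**
 * Fill {placeholder} tokens in a description template.
 * Placeholder names are hypothetical; unknown tokens are left untouched.
 */
function render_description(string $template, array $attributes): string
{
    $replacements = [];
    foreach ($attributes as $name => $value) {
        $replacements['{' . $name . '}'] = (string) $value;
    }

    // strtr() swaps all known tokens in a single pass.
    return strtr($template, $replacements);
}

// The customer can reorder or remove lines in the template freely.
$template = "{title}\n\n{short_description}\n\nBuy it here: {product_url}";

echo render_description($template, [
    'title'             => 'Example knife',
    'short_description' => 'A sample product text.',
    'product_url'       => 'https://example.com/product/example-knife/',
]), PHP_EOL;
```

Because the layout lives in the template string and not in the code, moving a block around does not require a code change.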

The following stats will be collected for review:

Youtube SEO

These are the relevant key aspects that help to get your videos more views.

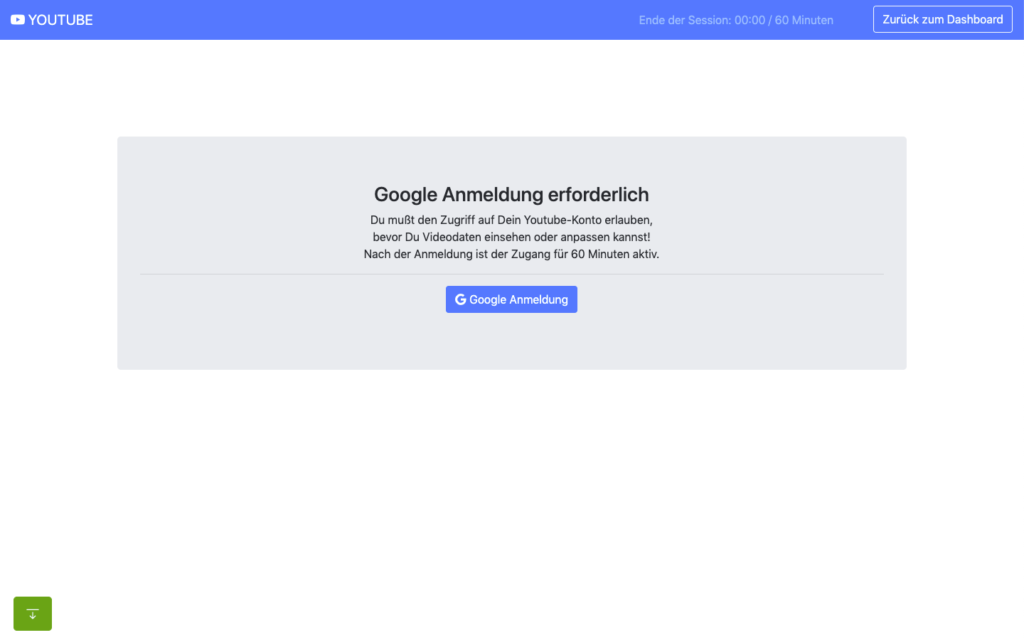

In the past, access to the YouTube Data API was far easier and less limited when it comes to offline / non-expiring OAuth2 refresh tokens.

When you are building a server-side application that is only available to your customer or moderators, it makes no sense to run that app through the Google App verification. Your app will never be used in public.

The YouTube Data API scopes are classified as sensitive and therefore require a third-party security assessment for public access.

The scopes I am requesting are https://www.googleapis.com/auth/youtube.upload + https://www.googleapis.com/auth/youtube.

Because of that, it’s far easier to just set up OAuth 2 in test mode and restrict access to your customer and specific additional accounts only (up to 100 test users allowed). What all these accounts need is access to your own or brand YouTube channel.

Preparation in the Google Cloud Console:

A detailed description can be found here.

You can circumvent verification for the consent screen by using an organisation setup at Google. Here is some info about that. With that setup, offline refresh tokens should work fine.

Update: I just tried that, but it won’t work with a branded YouTube account, even though the cloud user has admin access to it. I am not giving up yet, but Google / YouTube really make it difficult to just have a simple offline solution for specific tasks ;) BTW, I also forced the login hint to make sure the right account is logged in: $client->setLoginHint('YourWorkspaceAccount');

You might have heard of “The League of Extraordinary Packages”. It is a group of developers who have banded together to build solid, well tested PHP packages using modern coding standards.

They also offer an OAuth2-client + OAuth2 Google extension that can be used.

On the server, the Google API PHP SDK can be easily integrated using Composer.

In my customer plugin I neatly separated all relevant areas in classes & traits:

You can check the expiry time of your access token by accessing:

https://www.googleapis.com/oauth2/v1/tokeninfo?access_token=YOUR_TOKEN

“A Google Cloud Platform project with an OAuth consent screen configured for an external user type and a publishing status of “Testing” is issued a refresh token expiring in 7 days.” – Google
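The remaining lifetime of an access token can also be estimated locally, without calling the endpoint. This is a rough sketch, assuming the stored token is an array with `created` (Unix timestamp) and `expires_in` (seconds) fields, modelled on what the Google PHP client keeps; both helper functions are hypothetical:

```php
<?php

/**
 * Seconds until an access token expires, based on its "created" and
 * "expires_in" fields (assumed token layout, see the text above).
 */
function token_seconds_left(array $token, int $now): int
{
    $expiresAt = (int) ($token['created'] ?? 0) + (int) ($token['expires_in'] ?? 0);

    return max(0, $expiresAt - $now);
}

/**
 * True when the token is expired or about to expire within the safety margin.
 */
function token_needs_refresh(array $token, int $now, int $safetyMargin = 60): bool
{
    return token_seconds_left($token, $now) <= $safetyMargin;
}

$token = ['access_token' => 'YOUR_TOKEN', 'created' => 1700000000, 'expires_in' => 3599];

var_dump(token_needs_refresh($token, 1700000000 + 600));  // 2999 seconds left
var_dump(token_needs_refresh($token, 1700000000 + 3570)); // 29 seconds left
```

In a real setup `$now` would simply be `time()`; passing it in keeps the check easy to test.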

Basic Auth example from the SDK:

```php
<?php

// Call set_include_path() as needed to point to your client library.
set_include_path($_SERVER['DOCUMENT_ROOT'] . '/directory/to/google/api/');
require_once 'Google/Client.php';
require_once 'Google/Service/YouTube.php';
session_start();

/*
 * You can acquire an OAuth 2.0 client ID and client secret from the
 * Google Cloud Console <https://cloud.google.com/console>
 * For more information about using OAuth 2.0 to access Google APIs, please see:
 * <https://developers.google.com/youtube/v3/guides/authentication>
 * Please ensure that you have enabled the YouTube Data API for your project.
 */
$OAUTH2_CLIENT_ID = 'XXXXXXX.apps.googleusercontent.com';
$OAUTH2_CLIENT_SECRET = 'XXXXXXXXXX';
$REDIRECT = 'http://localhost/oauth2callback.php';
$APPNAME = "XXXXXXXXX";

$client = new Google_Client();
$client->setClientId($OAUTH2_CLIENT_ID);
$client->setClientSecret($OAUTH2_CLIENT_SECRET);
$client->setScopes('https://www.googleapis.com/auth/youtube');
$client->setRedirectUri($REDIRECT);
$client->setApplicationName($APPNAME);
$client->setAccessType('offline');

// Define an object that will be used to make all API requests.
$youtube = new Google_Service_YouTube($client);

if (isset($_GET['code'])) {
    if (strval($_SESSION['state']) !== strval($_GET['state'])) {
        die('The session state did not match.');
    }

    $client->authenticate($_GET['code']);
    $_SESSION['token'] = $client->getAccessToken();
}

if (isset($_SESSION['token'])) {
    $client->setAccessToken($_SESSION['token']);
    // Debug output only; this expects the token as a JSON string (older SDK versions).
    echo '<code>' . $_SESSION['token'] . '</code>';
}

// Initialise the output before the try block, so the catch blocks can append to it.
$htmlBody = '';

// Check to ensure that the access token was successfully acquired.
if ($client->getAccessToken()) {
    try {
        // Call the channels.list method to retrieve information about the
        // currently authenticated user's channel.
        $channelsResponse = $youtube->channels->listChannels('contentDetails', array(
            'mine' => 'true',
        ));

        foreach ($channelsResponse['items'] as $channel) {
            // Extract the unique playlist ID that identifies the list of videos
            // uploaded to the channel, and then call the playlistItems.list method
            // to retrieve that list.
            $uploadsListId = $channel['contentDetails']['relatedPlaylists']['uploads'];

            $playlistItemsResponse = $youtube->playlistItems->listPlaylistItems('snippet', array(
                'playlistId' => $uploadsListId,
                'maxResults' => 50
            ));

            $htmlBody .= "<h3>Videos in list $uploadsListId</h3><ul>";
            foreach ($playlistItemsResponse['items'] as $playlistItem) {
                $htmlBody .= sprintf('<li>%s (%s)</li>',
                    $playlistItem['snippet']['title'],
                    $playlistItem['snippet']['resourceId']['videoId']);
            }
            $htmlBody .= '</ul>';
        }
    } catch (Google_Service_Exception $e) {
        $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
            htmlspecialchars($e->getMessage()));
    } catch (Google_Exception $e) {
        $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
            htmlspecialchars($e->getMessage()));
    }

    $_SESSION['token'] = $client->getAccessToken();
} else {
    // No token yet: remember a random state value and ask the user to authorise.
    $state = mt_rand();
    $client->setState($state);
    $_SESSION['state'] = $state;

    $authUrl = $client->createAuthUrl();
    $htmlBody = <<<END
<h3>Authorization Required</h3>
<p>You need to <a href="$authUrl">authorise access</a> before proceeding.</p>
END;
}
?>

<!doctype html>
<html>
  <head>
    <title>My Uploads</title>
  </head>
  <body>
    <?php echo $htmlBody ?>
  </body>
</html>
```

A simple upload example can be found here. Updating an existing video, for example adding tags, looks like this:

```php
// Collects the HTML output; keep any value set earlier in the script.
$htmlBody = $htmlBody ?? '';

try {
    // REPLACE this value with the video ID of the video being updated.
    $videoId = "VIDEO_ID";

    // Call the API's videos.list method to retrieve the video resource.
    $listResponse = $youtube->videos->listVideos("snippet", array('id' => $videoId));

    // If $listResponse is empty, the specified video was not found.
    if (empty($listResponse)) {
        $htmlBody .= sprintf('<h3>Can\'t find a video with video id: %s</h3>', $videoId);
    } else {
        // Since the request specified a video ID, the response only
        // contains one video resource.
        $video = $listResponse[0];
        $videoSnippet = $video['snippet'];
        $tags = $videoSnippet['tags'];

        // Preserve any tags already associated with the video. If the video does
        // not have any tags, create a new list. Replace the values "tag1" and
        // "tag2" with the new tags you want to associate with the video.
        if (is_null($tags)) {
            $tags = array("tag1", "tag2");
        } else {
            array_push($tags, "tag1", "tag2");
        }

        // Set the tags array for the video snippet
        $videoSnippet['tags'] = $tags;

        // Update the video resource by calling the videos.update() method.
        $updateResponse = $youtube->videos->update("snippet", $video);

        $responseTags = $updateResponse['snippet']['tags'];

        $htmlBody .= "<h3>Video Updated</h3><ul>";
        $htmlBody .= sprintf('<li>Tags "%s" and "%s" added for video %s (%s) </li>',
            array_pop($responseTags), array_pop($responseTags),
            $videoId, $video['snippet']['title']);
        $htmlBody .= '</ul>';
    }
} catch (Google_Service_Exception $e) {
    $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
} catch (Google_Exception $e) {
    $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
}
```

All operations to and from the YouTube Data API are rate limited. What is important for us is the quota per day.

The default quota is 10,000 units per day. That sounds like a lot, but it is easily gone after updating 150-200 videos. You can request that this limit be raised, but that again means a lot of paperwork and questions that are just not needed.

The above limit just means that you need to cache as many queries as possible, to only query live when needed ;)
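A minimal sketch of such a cache, assuming a plain array as storage; in WordPress a transient or an option could take its place. The function name `cached_lookup()` and the cache key are hypothetical and do not reflect the plugin's actual storage:

```php
<?php

/**
 * Tiny time-based cache around an expensive lookup.
 * $cache is a plain array here (an assumption, see the text above).
 */
function cached_lookup(array &$cache, string $key, int $ttl, int $now, callable $fetch)
{
    if (isset($cache[$key]) && $cache[$key]['expires'] > $now) {
        return $cache[$key]['value']; // still fresh: no API call, no quota used
    }

    $value = $fetch(); // the only place where a live query happens
    $cache[$key] = ['value' => $value, 'expires' => $now + $ttl];

    return $value;
}

$cache = [];
$liveCalls = 0;
$fetchStats = function () use (&$liveCalls) {
    $liveCalls++;
    return ['viewCount' => 123]; // stand-in for a real API response
};

cached_lookup($cache, 'stats:VIDEO_ID', 3600, 1000, $fetchStats); // live query
cached_lookup($cache, 'stats:VIDEO_ID', 3600, 2000, $fetchStats); // served from cache
cached_lookup($cache, 'stats:VIDEO_ID', 3600, 5000, $fetchStats); // expired, live query again

echo $liveCalls, PHP_EOL; // 2
```

Only two of the three lookups reach the callback, so only those two would count against the daily quota.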

Something you learn fast, when experimenting with different things! I hit that limit multiple times in the first few days, with around 500 videos in the queue.

Different operations cost you a different amount of units.
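To get a feeling for how quickly the daily quota is used up, the costs can be added up in a few lines. This is only a sketch: the unit costs below are assumptions based on Google's published quota table and should be checked against the current documentation, and `quota_cost()` is a hypothetical helper:

```php
<?php

// Unit costs per call. Assumed values, verify them against Google's quota table.
const QUOTA_COSTS = [
    'videos.list'   => 1,
    'videos.update' => 50,
    'search.list'   => 100,
    'videos.insert' => 1600,
];

/**
 * Sum up the quota units for a set of planned calls.
 *
 * @param array $calls Map of API method name => number of calls.
 */
function quota_cost(array $calls): int
{
    $total = 0;
    foreach ($calls as $method => $count) {
        if (!array_key_exists($method, QUOTA_COSTS)) {
            throw new InvalidArgumentException("Unknown method: $method");
        }
        $total += QUOTA_COSTS[$method] * $count;
    }

    return $total;
}

// Updating 200 videos: one videos.list plus one videos.update per video.
echo quota_cost(['videos.list' => 200, 'videos.update' => 200]), PHP_EOL; // 10200
```

With these assumed costs, 200 updated videos already exceed a daily quota of 10,000 units.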

It also helps to use the Google Developer Playground to test-drive the YouTube Data API with your own credentials while optimising your own code.

You can define your own OAuth 2.0 configuration by clicking the cog in the upper right corner.

I set up the bulk updating so that it can be split over multiple days, if required. For this an offline refresh token is needed, as the standard access token expires after 60 minutes.
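Splitting a queue over several days boils down to chunking it by what fits into one day's quota. A rough sketch, assuming a fixed cost per video; `plan_daily_batches()` and the 51 units per video are hypothetical values for illustration:

```php
<?php

/**
 * Split a queue of video IDs into per-day batches that fit the daily quota.
 * $costPerVideo is the assumed unit cost of syncing one video.
 */
function plan_daily_batches(array $videoIds, int $dailyQuota, int $costPerVideo): array
{
    if ($costPerVideo < 1 || $dailyQuota < $costPerVideo) {
        throw new InvalidArgumentException('Quota must cover at least one video.');
    }

    // How many videos fit into one day, rounded down.
    $perDay = intdiv($dailyQuota, $costPerVideo);

    return array_chunk($videoIds, $perDay);
}

// 500 queued videos, 10,000 units per day, 51 units per video (assumed).
$queue   = array_map(fn (int $i): string => "video-$i", range(1, 500));
$batches = plan_daily_batches($queue, 10000, 51);

printf("%d days, %d videos on the first day\n", count($batches), count($batches[0]));
```

Each batch can then be processed by a scheduled task on its own day, using the refresh token to obtain a fresh access token first.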

My customer can also just update a single video, when changes are applied to the product or a new product has been added.

If more frequent updates are required, I will ask for the daily quota to be raised. You can circumvent the limit by using multiple Google Cloud Platform accounts with new OAuth credentials, but that is really overkill right now. I have done that in the past ;)

The GUI is just based on Bootstrap, to keep it simple and clean, using my own wrapper to make it work within the WordPress admin.

For all ajax operations, I am using htmx and _hyperscript, which I will talk about in another article in the future.

Really neat and clean way to build single page interfaces.

The whole plugin runs off its own REST API endpoint. Just love using WordPress as a headless system.

I used Twig / Timber for the templates, to separate logic and layout. Timber has been my go-to solution for years now. It drives my own and many customer websites.

This has been a lot of fun, maybe a bit too much LOL

I do geek-out about many of my projects, but this experience helped me to bring my WordPress toolbox to the next level. This will help to drive other things in the future.

Working so deeply with the YouTube Data API has been fun and feels so easy now, after all remaining problems have been solved.

Would have loved this during my portalZINE TV days ;)

If you read all this, you just earned yourself a badge for completion ;)

Need something similar or something else? Just say hi and we can talk.

„WPML (WordPress Multilingual) makes it easy to build multilingual sites and run them. It’s powerful enough for corporate sites, yet simple for blogs.“ – WPML

I have been running and setting up multilingual websites for more than 12 years. WordPress and related integrations have thankfully come a long way to make our lives a lot easier.

For basic content WPML is almost plug & play, but I do see more and more sites / customers struggling with more complex setups. WPML is one of the most popular multilingual plugins and is used on hundreds of thousands of websites.

Just so you know, WPML is a commercial solution!

The number of settings has increased a lot over the years and offers possible solutions for almost any content / plugin setup.

But for more complex setups, I would suggest hiring a professional to look over the settings, or studying the plugin documentation carefully.

Especially with a lot of content, wrong settings can quickly multiply problems and the need to revisit specific content over and over again.

WPML lets you translate any text that comes from themes / theme frameworks (DIVI, Elementor, Gutenberg …), plugins, menus, slugs, SEO and additionally supported integrations (Gravity Forms, ACF, WooCommerce …).

You can translate content internally for yourself, using translation management to translate with an internal team of translators or get help from external translators / translation services.

The latest version also offers AI translations, which allows you to get a decent start for most of your content.

In addition to the above, WPML String Translation allows you to translate texts that are not in posts, pages and taxonomy. This includes the site’s tagline, general texts in admin screens, widget titles and many other areas.

Well, I am a bit biased. I have not looked much at other solutions for the past 5 years, as WPML offers all I really need.

I have used it on projects from 2 to 15 languages and it scales nicely. At least with proper hosting attached!

Anything can be tweaked through the API, hooks and custom integrations. I have built additional WPML tools for my customers, to streamline some of the repetitive / boring tasks.

Their support is responsive and the forum already provides a huge amount of answers to most of the questions that might come up.

If you develop / maintain multiple customer websites with multilingual content, the investment is quickly amortized. I do offer WPML to my maintenance package customers, maybe something to consider ;)

It’s an essential solution in my WP toolbox.

WPML 4.5 is on its way and will include a „Translate Everything“ feature, among other fixes and enhancements.

Translate Everything allows you to translate all of your site’s content automatically as you create it. You can then review the translations on the front-end before publishing.

You might have heard about Structured Data, Schema.org and JSON-LD.

Search engines read structured data and use it to enhance search engine results. Structured data helps search engines to understand and categorize page content.

This structured data, in JSON-LD format, describes a simple Article.

```json
{
  "@context": "http://schema.org",
  "@type": "Article",
  "author": "John Doe",
  "interactionStatistic": [
    {
      "@type": "InteractionCounter",
      "interactionService": {
        "@type": "WebSite",
        "name": "Twitter",
        "url": "http://www.twitter.com"
      },
      "interactionType": "http://schema.org/ShareAction",
      "userInteractionCount": "1203"
    },
    {
      "@type": "InteractionCounter",
      "interactionType": "http://schema.org/CommentAction",
      "userInteractionCount": "78"
    }
  ],
  "name": "How to Tie a Reef Knot"
}
```

Schema.org is a collaborative, community activity with a mission to create, maintain, and promote schemas for structured data on the Internet. But not all structured data endpoints are actually used by Google, Bing or other search engines yet.

Google provides a detailed overview of structured data allowed and used for search results.

There are basic enhancements you can use, like the Article structured data above. There are also many other more specific uses, like Video, LocalBusiness, Events, FAQ, Job Postings, Recipe and so on. Bing also provides a basic overview, but their documentation is scattered and feels incomplete.

If you use a modern CMS, many structured data endpoints are already integrated out of the box (Article, Website, Logo, Person …).

Modular content management systems also often offer additional functionality through plugins that help integrate structured data directly. Some do it better than others!

But if you really want to dive deep and integrate all those little things, structured data is still far more powerful when added manually. Especially things like events, products, job listings, courses, Q&A can greatly be enhanced by hand.

Alex@portalZINE
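Adding structured data by hand does not need much code. The following is a minimal sketch, assuming the values come from your own CMS; the function name `article_jsonld()` and the selection of fields are illustrative only:

```php
<?php

/**
 * Build a JSON-LD <script> tag for an Article.
 * Field selection is illustrative; extend it with whatever the page needs.
 */
function article_jsonld(string $headline, string $authorName, int $commentCount): string
{
    $data = [
        '@context' => 'https://schema.org',
        '@type'    => 'Article',
        'headline' => $headline,
        'author'   => ['@type' => 'Person', 'name' => $authorName],
        'interactionStatistic' => [
            '@type'                => 'InteractionCounter',
            'interactionType'      => 'https://schema.org/CommentAction',
            'userInteractionCount' => $commentCount,
        ],
    ];

    // JSON_HEX_TAG escapes < and >, so a value can never close the script tag.
    $json = json_encode($data, JSON_HEX_TAG | JSON_UNESCAPED_SLASHES | JSON_PRETTY_PRINT);

    return '<script type="application/ld+json">' . "\n" . $json . "\n" . '</script>';
}

echo article_jsonld('How to Tie a Reef Knot', 'John Doe', 78), PHP_EOL;
```

The resulting tag can be printed into the page head and then checked with one of the validation tools.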

Google and Bing offer validation tools for structured data. Both integrate it into their Webmaster Tools. You can also use the JSON-LD Playground to validate the JSON-LD itself or RDFa Play, Structured Data Linter, Facebook Debugger, Schema.org Generator and many other tools.

I am a huge structured data fan and have been working with it for years now. I am constantly looking for new supported structured data endpoints, to enhance my own or customer websites & data.

Google constantly updates its documentation and highlights experimental structured data endpoints. Speakable, for example, highlights sections of a website that are best suited for audio playback.

Fresh structured data helps to promote your content and enhance SEO, directly enhancing your discoverability and your search engine position. Your content becomes more meaningful for search engines, making it easier for them to promote it to the right potential user. It also ties into the GO GREEN concept, as you are reducing bounces of your website for users getting offered the wrong content.

Things like recipes and how-tos are already pushed to the top of the search index. A perfect way to promote your website and get noticed.