This is not a tutorial, but more like sharing a nice geeky road-trip ;)

I have a pretty good understanding of the YouTube Data API, as I actively used it on portalZINE TV in the past to upload videos and dynamically link them to my local post types.

For one of my latest customer projects (TYPEMYKNIFE / typemyknife.com), the task was a bit more complicated and the goal was to make it as future-proof as it can be with the Google APIs :)

Prerequisites / References to get you started:

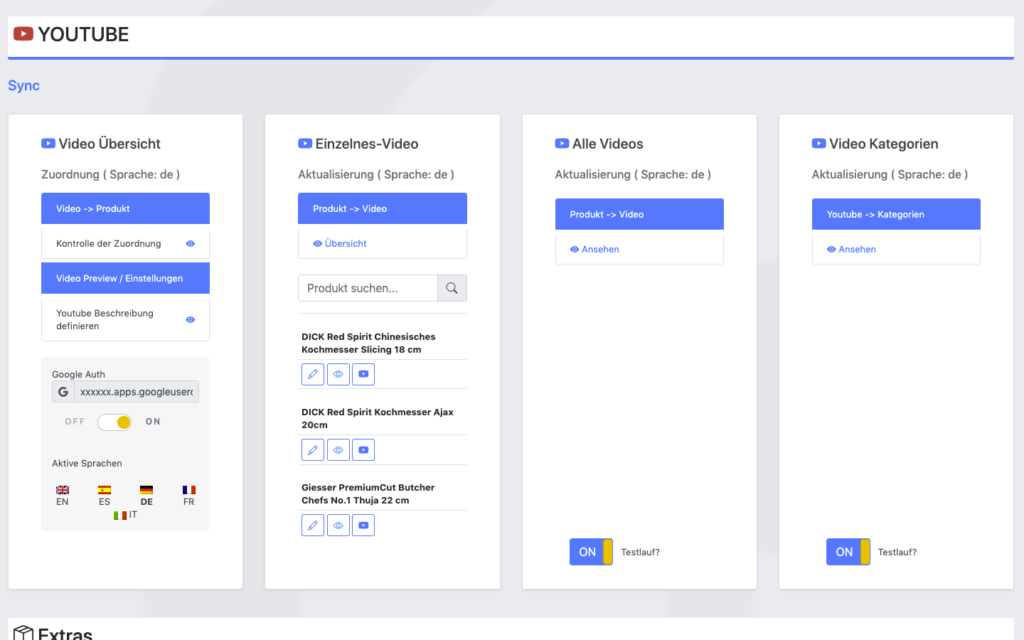

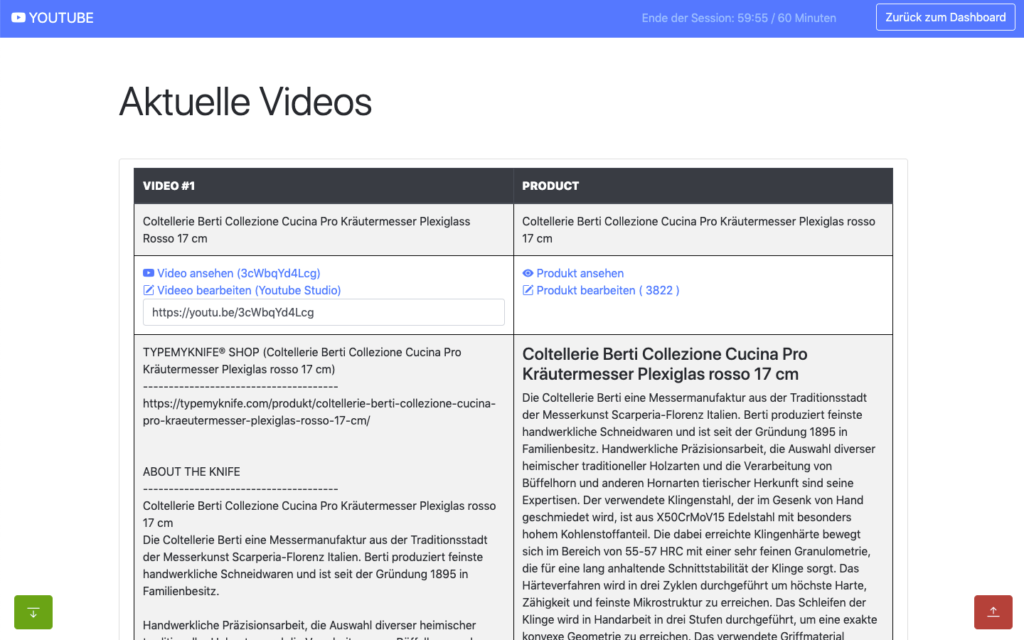

The goal for the setup was to actively synchronize WooCommerce products with their linked / attached videos, which are hosted on YouTube.

As the website is multilingual, WPML integration is critical as well. And as YouTube allows localization of title and description, that can be added into the mix quite easily in the future ;)

The following product attributes should be mirrored and optimised for YouTube:

The following attributes should be integrated into the description to enrich the YouTube description:

All of these attributes will be collected internally and assigned using a simple template system, which allows the customer to move parts around and lay out the description for YouTube freely.
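To illustrate the template idea, here is a minimal sketch written in JavaScript (the plugin itself is PHP). The `{{placeholder}}` syntax, the attribute names and the example values are all assumptions made for illustration, not the plugin's actual format.

```javascript
// Hypothetical placeholder syntax: {{attribute_name}}.
// Unknown or empty attributes are rendered as an empty string.
function renderDescription(template, attributes) {
  return template
    .replace(/\{\{\s*([\w.-]+)\s*\}\}/g, (match, key) => {
      const known = Object.prototype.hasOwnProperty.call(attributes, key);
      const value = known ? attributes[key] : null;
      return value === undefined || value === null ? '' : String(value);
    })
    // Collapse the gaps that empty placeholders leave behind.
    .replace(/\n{3,}/g, '\n\n')
    .trim();
}

// The customer can reorder these lines freely without touching any code.
const template = [
  '{{title}}',
  '',
  '{{short_description}}',
  '',
  'Shop: {{product_url}}',
  '',
  '{{hashtags}}',
].join('\n');

const description = renderDescription(template, {
  title: 'Example Knife',
  short_description: 'Hand-finished chef knife.',
  product_url: 'https://example.com/product/example-knife',
});

console.log(description);
```

Keeping the layout in a plain string means the order of the parts is data, not code, which is what lets a non-developer rearrange it.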

The following stats will be collected for review:

YouTube SEO

These are the key aspects that help your videos get more views.

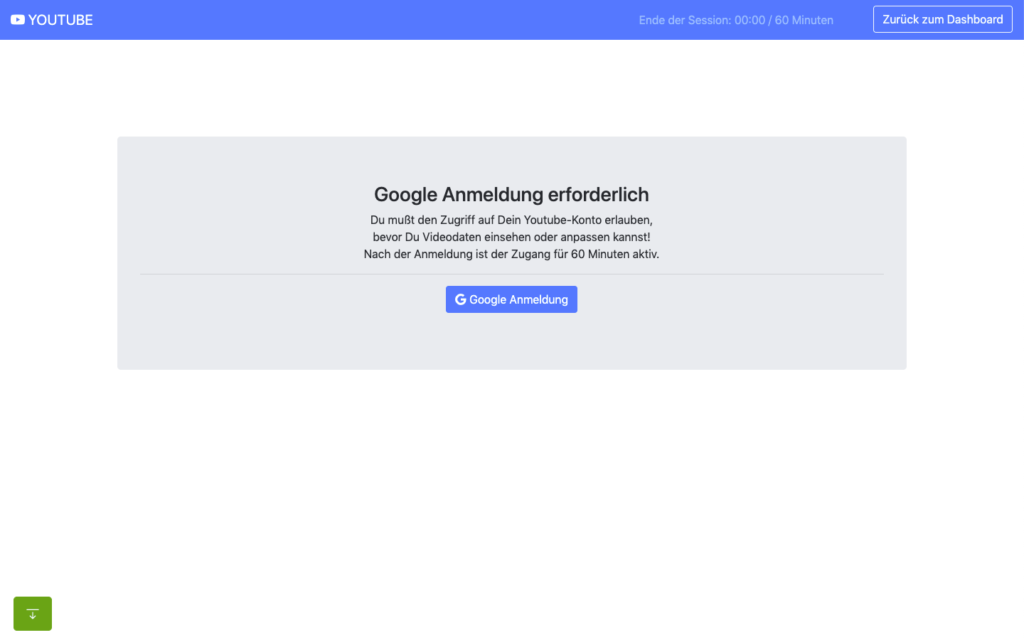

In the past, access to the YouTube Data API was far easier and less limited when it comes to offline / non-expiring OAuth2 refresh tokens.

When you are building a server-side application that is only available to your customer or moderators, it makes no sense to run that app through the Google App verification. Your app will never be used in public.

The YouTube Data API scopes are classified as sensitive and therefore require a third-party security assessment for public access.

The scopes I am requesting are https://www.googleapis.com/auth/youtube.upload + https://www.googleapis.com/auth/youtube.

Because of that it's far easier to just set up OAuth 2 in test mode and restrict access to your customer and specific additional accounts only (up to 100 test users allowed). All these accounts need is access to your own or the brand YouTube channel.

Preparation in the Google Cloud Console:

A detailed description can be found here.

You can circumvent verification for the consent screen by using an organisation setup at Google. Here is some information about that. With that setup, offline refresh tokens should work fine.

Update: I just tried that, but it won't work with a branded YouTube account, even though the cloud user has admin access to it. Not giving up yet, but Google / YouTube really makes it difficult to just have a simple offline solution for specific tasks ;) BTW, I also forced the login hint to make sure the right account is logged in: $client->setLoginHint('YourWorkspaceAccount');

You might have heard of "The League of Extraordinary Packages". It is a group of developers who have banded together to build solid, well tested PHP packages using modern coding standards.

They also offer an OAuth2-client + OAuth2 Google extension that can be used.

On the server, the Google API PHP SDK can be easily integrated using Composer.

In my customer plugin I neatly separated all relevant areas in classes & traits:

You can check the expiry time of your access token by accessing:

https://www.googleapis.com/oauth2/v1/tokeninfo?access_token=YOUR_TOKEN

“A Google Cloud Platform project with an OAuth consent screen configured for an external user type and a publishing status of ‘Testing’ is issued a refresh token expiring in 7 days.” – Google
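The expiry check against the tokeninfo endpoint could be sketched roughly like this, in JavaScript for brevity. The helper names and the 5-minute safety margin are my own assumptions. The sketch relies on the endpoint's response carrying an `expires_in` field in seconds and treats anything else as "refresh now".

```javascript
const TOKENINFO_URL = 'https://www.googleapis.com/oauth2/v1/tokeninfo';

// Build the lookup URL for a given access token.
function buildTokenInfoUrl(accessToken) {
  return `${TOKENINFO_URL}?access_token=${encodeURIComponent(accessToken)}`;
}

// Decide from a tokeninfo response whether the token should be refreshed.
// safetyMarginSeconds is an assumed buffer, not a value mandated by Google.
function needsRefresh(tokenInfo, safetyMarginSeconds = 300) {
  const expiresIn = Number(tokenInfo.expires_in);
  if (!Number.isFinite(expiresIn)) {
    return true; // error response or unexpected shape
  }
  return expiresIn <= safetyMarginSeconds;
}

// Network wrapper (requires a runtime with a global fetch, e.g. Node 18+).
async function checkToken(accessToken) {
  const response = await fetch(buildTokenInfoUrl(accessToken));
  if (!response.ok) {
    return { valid: false, refresh: true };
  }
  const info = await response.json();
  return { valid: true, refresh: needsRefresh(info) };
}
```

Separating the decision (`needsRefresh`) from the HTTP call keeps the logic testable without hitting Google at all.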

Basic Auth example from the SDK:

```php
<?php

// Call set_include_path() as needed to point to your client library.
set_include_path($_SERVER['DOCUMENT_ROOT'] . '/directory/to/google/api/');
require_once 'Google/Client.php';
require_once 'Google/Service/YouTube.php';
session_start();

/*
 * You can acquire an OAuth 2.0 client ID and client secret from the
 * Google Cloud Console <https://cloud.google.com/console>
 * For more information about using OAuth 2.0 to access Google APIs, please see:
 * <https://developers.google.com/youtube/v3/guides/authentication>
 * Please ensure that you have enabled the YouTube Data API for your project.
 */
$OAUTH2_CLIENT_ID = 'XXXXXXX.apps.googleusercontent.com';
$OAUTH2_CLIENT_SECRET = 'XXXXXXXXXX';
$REDIRECT = 'http://localhost/oauth2callback.php';
$APPNAME = "XXXXXXXXX";

$client = new Google_Client();
$client->setClientId($OAUTH2_CLIENT_ID);
$client->setClientSecret($OAUTH2_CLIENT_SECRET);
$client->setScopes('https://www.googleapis.com/auth/youtube');
$client->setRedirectUri($REDIRECT);
$client->setApplicationName($APPNAME);
$client->setAccessType('offline');

// Define an object that will be used to make all API requests.
$youtube = new Google_Service_YouTube($client);

if (isset($_GET['code'])) {
    if (strval($_SESSION['state']) !== strval($_GET['state'])) {
        die('The session state did not match.');
    }

    $client->authenticate($_GET['code']);
    $_SESSION['token'] = $client->getAccessToken();
}

if (isset($_SESSION['token'])) {
    $client->setAccessToken($_SESSION['token']);
    echo '<code>' . $_SESSION['token'] . '</code>';
}

// Check to ensure that the access token was successfully acquired.
if ($client->getAccessToken()) {
    try {
        // Call the channels.list method to retrieve information about the
        // currently authenticated user's channel.
        $channelsResponse = $youtube->channels->listChannels('contentDetails', array(
            'mine' => 'true',
        ));

        $htmlBody = '';
        foreach ($channelsResponse['items'] as $channel) {
            // Extract the unique playlist ID that identifies the list of videos
            // uploaded to the channel, and then call the playlistItems.list method
            // to retrieve that list.
            $uploadsListId = $channel['contentDetails']['relatedPlaylists']['uploads'];

            $playlistItemsResponse = $youtube->playlistItems->listPlaylistItems('snippet', array(
                'playlistId' => $uploadsListId,
                'maxResults' => 50
            ));

            $htmlBody .= "<h3>Videos in list $uploadsListId</h3><ul>";
            foreach ($playlistItemsResponse['items'] as $playlistItem) {
                $htmlBody .= sprintf('<li>%s (%s)</li>', $playlistItem['snippet']['title'],
                    $playlistItem['snippet']['resourceId']['videoId']);
            }
            $htmlBody .= '</ul>';
        }
    } catch (Google_Service_Exception $e) {
        $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
            htmlspecialchars($e->getMessage()));
    } catch (Google_Exception $e) {
        $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
            htmlspecialchars($e->getMessage()));
    }

    $_SESSION['token'] = $client->getAccessToken();
} else {
    // No token yet: remember a random state value and ask the user to authorise.
    $state = mt_rand();
    $client->setState($state);
    $_SESSION['state'] = $state;

    $authUrl = $client->createAuthUrl();
    $htmlBody = <<<END
<h3>Authorization Required</h3>
<p>You need to <a href="$authUrl">authorise access</a> before proceeding.</p>
END;
}
?>

<!doctype html>
<html>
<head>
<title>My Uploads</title>
</head>
<body>
<?php echo $htmlBody; ?>
</body>
</html>
```

A simple upload example can be found here.

```php
try {
    // REPLACE this value with the video ID of the video being updated.
    $videoId = "VIDEO_ID";

    // Call the API's videos.list method to retrieve the video resource.
    $listResponse = $youtube->videos->listVideos("snippet", array('id' => $videoId));

    // If $listResponse is empty, the specified video was not found.
    if (empty($listResponse)) {
        $htmlBody .= sprintf('<h3>Can\'t find a video with video id: %s</h3>', $videoId);
    } else {
        // Since the request specified a video ID, the response only
        // contains one video resource.
        $video = $listResponse[0];
        $videoSnippet = $video['snippet'];
        $tags = $videoSnippet['tags'];

        // Preserve any tags already associated with the video. If the video does
        // not have any tags, create a new list. Replace the values "tag1" and
        // "tag2" with the new tags you want to associate with the video.
        if (is_null($tags)) {
            $tags = array("tag1", "tag2");
        } else {
            array_push($tags, "tag1", "tag2");
        }

        // Set the tags array for the video snippet
        $videoSnippet['tags'] = $tags;

        // Update the video resource by calling the videos.update() method.
        $updateResponse = $youtube->videos->update("snippet", $video);

        $responseTags = $updateResponse['snippet']['tags'];

        $htmlBody .= "<h3>Video Updated</h3><ul>";
        $htmlBody .= sprintf('<li>Tags "%s" and "%s" added for video %s (%s) </li>',
            array_pop($responseTags), array_pop($responseTags),
            $videoId, $video['snippet']['title']);
        $htmlBody .= '</ul>';
    }
} catch (Google_Service_Exception $e) {
    $htmlBody .= sprintf('<p>A service error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
} catch (Google_Exception $e) {
    $htmlBody .= sprintf('<p>A client error occurred: <code>%s</code></p>',
        htmlspecialchars($e->getMessage()));
}
```

All operations to and from the YouTube Data API are rate limited. What matters for us is the daily quota.

The default quota is 10,000 units per day. That sounds like a lot, but it is easily gone after updating 150-200 videos. You can request a higher limit, but that again means a lot of paperwork and questions that are just not needed.

The above limit just means that you need to cache as many queries as possible and only query live when needed ;)
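As a rough idea of what "cache as many queries as possible" can look like, here is a minimal time-based cache sketch in JavaScript. The function names and the one-hour lifetime are assumptions for illustration; in a WordPress plugin the same idea would more likely sit on top of transients or an object cache.

```javascript
// Minimal TTL cache: the loader is only called when there is no fresh entry.
// `now` is injectable so the expiry behaviour can be tested without waiting.
function createTtlCache(ttlMs, now = Date.now) {
  const entries = new Map();

  return {
    get(key, loader) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) {
        return hit.value; // served from cache, no API units spent
      }
      const value = loader(key);
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
    // Call this after a video was updated so the next read is live again.
    invalidate(key) {
      entries.delete(key);
    },
  };
}

// Usage: `fetchSnippet` stands in for a real (quota-costing) API call.
const snippetCache = createTtlCache(60 * 60 * 1000);
const fetchSnippet = (videoId) => ({ id: videoId, title: `Title of ${videoId}` });

const first = snippetCache.get('VIDEO_ID', fetchSnippet);
const second = snippetCache.get('VIDEO_ID', fetchSnippet); // cached
console.log(first === second);
```

If the loader returns a promise, the promise itself is cached, which also caches failures; a production version would want to evict rejected entries.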

Something you learn fast, when experimenting with different things! I hit that limit multiple times in the first few days, with around 500 videos in the queue.

Different operations cost a different number of units.
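A quick back-of-the-envelope sketch (JavaScript) shows why the quota runs out so fast. The unit costs below are the values I know from Google's published quota cost table (1 unit for a list call, 50 for an update, 1600 for an upload); treat them as assumptions and verify them against the current table before relying on them.

```javascript
const DAILY_QUOTA_UNITS = 10000;

// Assumed unit costs per API method; check the official quota cost table.
const UNIT_COSTS = {
  'videos.list': 1,
  'videos.update': 50,
  'videos.insert': 1600,
};

// Sum the cost of a set of operations, e.g. { 'videos.update': 3 }.
function estimateUnits(operations) {
  return Object.entries(operations).reduce((total, [method, count]) => {
    if (!(method in UNIT_COSTS)) {
      throw new Error(`Unknown cost for ${method}`);
    }
    return total + UNIT_COSTS[method] * count;
  }, 0);
}

// Updating one video means reading it first, then writing it back.
const unitsPerVideo = estimateUnits({ 'videos.list': 1, 'videos.update': 1 });
const videosPerDay = Math.floor(DAILY_QUOTA_UNITS / unitsPerVideo);

console.log(unitsPerVideo, videosPerDay);
```

With these numbers one read-then-update cycle costs 51 units, so a fresh daily quota covers just under 200 videos, and that is before any other call eats into it.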

It also helps to use the Google OAuth 2.0 Playground to test-drive the YouTube Data API with your own credentials while optimising your own code.

You can define your own OAuth 2.0 configuration by clicking the cog in the upper right corner.

I set up the bulk updating so it can be split over multiple days, if required. For this an offline refresh token is needed, as the standard access token expires after 60 minutes.
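Splitting a queue over several days boils down to simple chunking by budget. The sketch below is JavaScript and purely illustrative: the function name, the 51 units per video and the 9,000-unit daily budget (leaving some headroom for single updates) are my assumptions, not the plugin's real numbers.

```javascript
// Split a list of video IDs into per-day batches that fit a unit budget.
function planDailyBatches(videoIds, unitsPerVideo, dailyBudgetUnits) {
  const perDay = Math.floor(dailyBudgetUnits / unitsPerVideo);
  if (perDay < 1) {
    throw new Error('Daily budget is too small for a single update');
  }
  const batches = [];
  for (let i = 0; i < videoIds.length; i += perDay) {
    batches.push(videoIds.slice(i, i + perDay));
  }
  return batches;
}

// Example: 500 queued videos, hypothetical IDs.
const queue = Array.from({ length: 500 }, (_, i) => `video-${i + 1}`);
const batches = planDailyBatches(queue, 51, 9000);

batches.forEach((batch, day) => {
  console.log(`Day ${day + 1}: ${batch.length} videos`);
});
```

Each batch would then be processed by a scheduled job on its own day, which is exactly where the long-lived refresh token is needed.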

My customer can also just update a single video, when changes are applied to the product or a new product has been added.

If more frequent updates are required, I will ask for a raise of the daily quota. You can circumvent the limit by using multiple Google Cloud Platform accounts with new OAuth credentials, but that is really overkill right now. I have done that in the past ;)

The GUI is simply based on Bootstrap, to keep it simple and clean. I am using my own wrapper to make it work within the WordPress admin.

For all ajax operations, I am using htmx and _hyperscript, which I will talk about in another article in the future.

Really neat and clean way to build single page interfaces.

The whole plugin runs off its own REST API endpoint. Just love using WordPress as a headless system.

I used TWIG / Timber for the templates, to separate logic and layout. Timber has been my go-to solution for years now. It drives my own and many customer websites.

This has been a lot of fun, maybe a bit too much LOL

I do geek-out about many of my projects, but this experience helped me to bring my WordPress toolbox to the next level. This will help to drive other things in the future.

Working so deeply with the Youtube Data API has been fun and feels so easy now, after all remaining problems have been solved.

Would have loved this during my portalZINE TV days ;)

If you read all this, you just earned yourself a badge for completion ;)

Need something similar or something else? Just say hi and we can talk.

"WPML (WordPress Multilingual) makes it easy to build multilingual sites and run them. It's powerful enough for corporate sites, yet simple for blogs." – WPML

I have been running and setting up multilingual websites for more than 12 years. WordPress and related integrations have thankfully come a long way to make our lives a lot easier.

For basic content WPML is almost plug & play, but I do see more and more sites / customers struggling with more complex setups. WPML is one of the most popular multilingual plugins and is used on hundreds of thousands of websites.

Just so you know, WPML is a commercial solution!

The amount of settings has increased a lot over the years and offers possible solutions for almost any content / plugin setup.

But for more complex setups, I would suggest hiring a professional to look over the settings, or studying the plugin documentation carefully.

Especially with a lot of content, it can quickly increase problems and the need to revisit specific content over and over again.

WPML lets you translate any text that comes from themes / theme frameworks (DIVI, Elementor, Gutenberg …), plugins, menus, slugs, SEO and additionally supported integrations (Gravity Forms, ACF, WooCommerce …).

You can translate content internally for yourself, using translation management to translate with an internal team of translators or get help from external translators / translation services.

The latest version also offers AI translations, which allows you to get a decent start for most of your content.

In addition to the above, WPML String Translation allows you to translate texts that are not in posts, pages and taxonomy. This includes the site’s tagline, general texts in admin screens, widget titles and many other areas.

Well, I am a bit biased. I have not looked much at other solutions for the past 5 years, as it offers all I really need.

I have used it on projects from 2 to 15 languages and it scales nicely. At least with proper hosting attached!

Anything can be tweaked through the API, hooks and custom integrations. I have built additional WPML tools for my customers, to streamline some of the repetitive / boring tasks.

Their support is responsive and the forum already provides a huge amount of answers to most of the questions that might come up.

If you develop / maintain multiple customer websites with multilingual content, the investment is quickly amortized. I do offer WPML to my maintenance package customers, maybe something to consider ;)

It's an essential solution in my WP toolbox.

WPML 4.5 is on its way and will include a "Translate Everything" feature, among other fixes and enhancements.

Translate Everything allows you to translate all of your site’s content automatically as you create it. You can then review the translations on the front-end before publishing.

VR is a new passion of mine that I play with in my free time, but also explore as a developer and tech enthusiast.

As video quality has evolved a lot in the past 2 years, the big topic now is full body immersion.

The following things are becoming more important:

I will use this article to collect things that are already available, DIY projects, experiments and things that are in their early stages.

Hand & finger tracking is already making its way into consumer products. It is still not widely integrated, but has made big jumps the past year.

Eye tracking is not only important to make avatars more life-like, but also to track your eye focus and help to reduce processor load.

Lip tracking has made a big jump with the new Vive lip tracker and is important for social interactions.

Body tracking is one of the areas that has so many projects attached to it. There are so many neat solutions out there that almost anyone can use it by now.

Free locomotion is one of the biggest challenges in VR right now. You can increase your play area, but that is still limiting and requires space. There are VR treadmills, but none of them really reproduces life-like natural movement yet. And those solutions that come close are still out of reach. Most of the tracking above is somehow covered and will be available soon, while real locomotion is really the hardest to solve of them all.

Always crucial to have the right look for yourself in VR :)

"Klaro [klɛro] is a simple consent management platform (CMP) and privacy tool that helps you to be transparent about the third-party applications on your website. It is designed to be extremely simple, intuitive and easy to use while allowing you to be compliant with all relevant regulations (notably GDPR and ePrivacy)."

The tool is developed by KIPROTECT and can be found on GitHub.

As I have integrated Klaro on a couple of websites so far, I decided to make my work a bit easier and start building some basic clean themes for it.

I have a basic white and a black & white theme so far. The download includes a test-drive folder to showcase the themes. The white theme is also used on this website ;)

I really hate those standard consent management modals, that integrate badly into the website native design.

Klaro does a good job of letting you override its core theme, which makes things a bit more pleasant. We do have to live with those modals from now on ;)

The themes are Sass-based and provide easy configuration options.

Enjoy!

cubicFUSION Themes for Klaro! @ Github

Klaro is still missing some things, so I will collect some workarounds here for you to play with.

Updates: Github Discussion

– 0.7.10 also adds custom callbacks to services (onAccept, onDecline, onInit) NICE!

I used a simple MutationObserver to do some magic for now, without diving into the core Klaro code. I am sure they already have an event listener or watcher set up.

```javascript
// Custom event that fires once each time the Klaro consent modal opens.
document.addEventListener('consentModalOpen', function (e) {
  console.log('consentModalOpen');
}, false);

document.addEventListener('DOMContentLoaded', function (e) {
  // Fall back to <body> in case Klaro has not created its container yet.
  var cm_target = document.getElementById('klaro') || document.body,
      cm_visible = false;

  var observer = new MutationObserver(function (mutationRecords) {
    var cm_open = document.querySelector('#klaro .cookie-modal') !== null;

    // Dispatch only on the closed -> open transition, not on every mutation.
    if (cm_open && !cm_visible) {
      document.dispatchEvent(new Event('consentModalOpen', {
        bubbles: true,
        cancelable: true
      }));
    }
    cm_visible = cm_open;
  });

  observer.observe(cm_target, {
    childList: true,
    subtree: true
  });
});
```

Admin Enhancer is the first free plugin released under the cubicFUSION brand. The plugin is still work in progress, but a tool that is already used within some of my client projects. I am using this plugin to centralise things I love & need, when sending out a finished website or project.

NEW: DASHBOARD GUTENBERG / DASHBOARD TEMPLATES

NEW: ADMIN TOOLBAR

UPDATE: SHORTCODES

This version includes a new addon "GUTENBERG DASHBOARD", which allows you to build white-label admin dashboards using the Gutenberg editor.

It integrates with the SHORTCODES addon and lets you drop in the dashboard widgets via its own Gutenberg block.

The block provides settings to overwrite CSS from the admin widgets, allowing you to tweak them a bit for better visual integration. The dashboard template itself can be tweaked using CSS and Sass (SCSS) now 😉

I am also releasing the first integration of the “ADMIN TOOLBAR” addon, which allows you to tweak some of the admin toolbar and footer options (Hide WP Logo, Hide Toolbar on Frontend, Hide Menu Items ..)

Already working on 0.3 … ENJOY!

You might have heard about Structured Data, Schema.org and JSON-LD.

Search engines read structured data and use it to enhance search engine results. Structured data helps search engines to understand and categorize page content.

This structured data, in JSON-LD format, describes a simple Article.

```json
{
  "@context": "http://schema.org",
  "@type": "Article",
  "author": "John Doe",
  "interactionStatistic": [
    {
      "@type": "InteractionCounter",
      "interactionService": {
        "@type": "WebSite",
        "name": "Twitter",
        "url": "http://www.twitter.com"
      },
      "interactionType": "http://schema.org/ShareAction",
      "userInteractionCount": "1203"
    },
    {
      "@type": "InteractionCounter",
      "interactionType": "http://schema.org/CommentAction",
      "userInteractionCount": "78"
    }
  ],
  "name": "How to Tie a Reef Knot"
}
```

Schema.org is a collaborative, community activity with a mission to create, maintain, and promote schemas for structured data on the Internet. But not all structured data endpoints are actually used by Google, Bing or other search engines yet.

Google provides a detailed overview of structured data allowed and used for search results.

There are basic enhancements you can use, like the Article structured data above. There are also many other more specific uses, like Video, LocalBusiness, Events, FAQ, Job Postings, Recipe and so on. Bing also provides a basic overview, but their documentation is scattered and feels incomplete.

If you use a modern CMS, many structured data endpoints are already integrated out of the box (Article, Website, Logo, Person …).

Modular content management systems also often offer additional functionality through plugins that help integrate structured data directly. Some do it better than others!

But if you really want to dive deep and integrate all those little things, structured data is still far more powerful when added manually. Things like events, products, job listings, courses and Q&A in particular can be greatly enhanced by hand.
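Adding structured data by hand can be as small as serialising an object into a script tag. The following JavaScript sketch builds a schema.org Event; the helper name and all event details are made-up placeholders, and a real integration would pull them from your CMS.

```javascript
// Wrap a plain object as a JSON-LD script tag for the page <head>.
function toJsonLdScript(data) {
  // Escape "<" so a "</script>" inside a value cannot close the tag early.
  const json = JSON.stringify(data, null, 2).replace(/</g, '\\u003c');
  return `<script type="application/ld+json">\n${json}\n</script>`;
}

// Placeholder event data, for illustration only.
const event = {
  '@context': 'https://schema.org',
  '@type': 'Event',
  name: 'Example Knife Sharpening Workshop',
  startDate: '2030-05-01T18:00:00+02:00',
  location: {
    '@type': 'Place',
    name: 'Example Venue',
    address: 'Example Street 1, 12345 Example City',
  },
};

const snippet = toJsonLdScript(event);
console.log(snippet);
```

Whatever you generate this way should still go through one of the validation tools before it ships, since search engines silently ignore markup they cannot parse.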

Alex@portalZINE

Google and Bing offer validation tools for structured data. Both integrate it into their Webmaster Tools. You can also use the JSON-LD Playground to validate the JSON-LD itself or RDFa Play, Structured Data Linter, Facebook Debugger, Schema.org Generator and many other tools.

I am a huge structured data fan and have been working with it for years now. I am constantly looking for new supported structured data endpoints, to enhance my own or customer websites & data.

Google constantly updates their documentation and highlights experimental structured data endpoints. Speakable, for example, highlights the sections of a website that are best suited for audio playback.

Fresh structured data helps to promote your content and enhance SEO, directly enhancing your discoverability and your search engine position. Your content becomes more meaningful for search engines, making it easier for them to promote it to the right potential user. It also ties into the GO GREEN concept, as you are reducing bounces of your website for users getting offered the wrong content.

Things like recipes and how-tos are already pushed to the top of the search index. A perfect way to promote your website and get noticed.

Together with my partners in crime (Dorit & Micha), we have finally opened our own personal online store.

We have been selling our single origin coffees (1st Single Malt Whisky Coffee, Basic – Single Origin Arabica, Kill me Quick Espresso -Single Origin Robusta), teas (Kräuterschorle – Kräutertee, Feuerkieker – Schwarztee) and rum (Fortune Teller – Double Aged Barbados Rum) using the Amazon Marketplace for the past 2 years.

GreenApe has been a side project for the past years and I never wanted to deal with the maintenance of our own store. But it's time to move on and do our own thing. Amazon has removed so many useful features over the years or added a new fee on top of other fees. Even though Amazon provides access to a large number of customers, for small companies the fees build up quickly.

With our own store we can finally do bundles and coupons again, and offer better optimized shipping. It will also allow me to better test-drive some interesting new features for my customers ;) Yeah, it's kind of my new toy or shopping lab! It's fun being able to work on untested new SEO features, structured data, merchant tools, shopping ads and the tracking of all of those.

We have been selling in Germany for the past 2 years, but that might change in the future, depending on how well the new store shapes up :)

If you live in Germany, love good coffee, tea or rum … say Hi!

GreenApe – Makes Your Life Better

Homepage

Shop

Contact us

Development today relies on multiple teams, services, and environments all working in unison. A topic that always comes up, when setting up a new development environment: How do we secure important credentials, while not making it too complicated for the rest of the team?

The key when working with version control systems like Git, is to keep any type of credentials out of the versioning system. These can be API keys, database or email passwords.

Even if it's a private repository, development environments might change. It can be a simple staging & live website setup you are maintaining.

```
DB_HOST=localhost
DB_USER=username
DB_PASS=password
```

The simplest way in PHP is to use .env files to store your credentials outside of the publicly accessible directory structure. So outside of public_html, but still within reach of the executing environment to read it. Variables are accessible through $_ENV['yourVar'] or getenv("yourVar"), once included in your code.

To make it simple you can use the popular package vlucas/phpdotenv, which reads and imports the file automatically.

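```php
<?php
require_once __DIR__ . '/../vendor/autoload.php';

// phpdotenv v4/v5 API; older 2.x releases used `new Dotenv\Dotenv(...)`.
// Values land in $_ENV / $_SERVER; v5 only fills getenv() when you use
// Dotenv\Dotenv::createUnsafeImmutable() instead.
$dotenv = Dotenv\Dotenv::createImmutable(__DIR__ . '/../');
$dotenv->load();
```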
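For a feel of what such a loader does under the hood, here is a deliberately tiny parser sketch. It is written in JavaScript purely for illustration and is not how vlucas/phpdotenv is implemented; the real library handles quoting, escaping and variable expansion far more thoroughly.

```javascript
// Parse KEY=value lines into an object. Comments and blank lines are skipped,
// and one pair of surrounding quotes is stripped from the value.
function parseEnv(text) {
  const result = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === '' || line.startsWith('#')) {
      continue;
    }
    const eq = line.indexOf('=');
    if (eq < 1) {
      continue; // no key before "=", or no "=" at all
    }
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    const quoted =
      value.length >= 2 &&
      (value[0] === '"' || value[0] === "'") &&
      value[value.length - 1] === value[0];
    if (quoted) {
      value = value.slice(1, -1);
    }
    result[key] = value;
  }
  return result;
}

const config = parseEnv(
  '# database\nDB_HOST=localhost\nDB_USER=username\nDB_PASS="pass=word"\n'
);
console.log(config.DB_HOST, config.DB_USER);
```

Only the first "=" splits key and value, so passwords containing "=" survive intact.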

Don’t fool yourself, if an attacker finds a way into your system, these variables can be easily read. This is just hiding the file from public access and provides some convenience while developing or sharing code.

Some people propose to encrypt / decrypt environment variables using a secret key. But if an attacker can access your data, he can also find the secret key.

There are some nice packages that offer just that. You have to decide if those fit your needs.

psecio/secure_dotenv – This library provides an easy way to handle the encryption and decryption of the information in your .env file. @ GitHub

johnathanmiller/secure-env-php – Env encryption and decryption library. Prevent committing and exposing vulnerable plain-text environment variables in production environments. The lib provides a nice guided interface to encrypt your .env file. @ GitHub

beyondcode/laravel-credentials – Add encrypted credentials to your Laravel production environment. You can edit and encrypt using php artisan credentials:edit. @ GitHub

The Apache2 environment variables are set in the /etc/apache2/envvars file. These variables are not the same as the environment variables of your Linux system; they are stored and manipulated in an internal Apache structure.

The /etc/apache2/envvars file holds variable definitions such as APACHE_LOG_DIR (the location of the Apache log files), APACHE_PID_FILE (the file that stores the Apache process ID), APACHE_RUN_USER (the user that runs Apache, by default www-data), etc.

You can open and modify this file in a text editor of your choice. This is nice, but far from simple and requires a server restart. This is something which helps you when hardening security on a live deployed setup.

There are dynamic approaches, but you can do some research for that yourself :) Skipped that rabbit hole for now …

Handling secrets completely detached is another possibility. This is surely overkill for most cases, but using an Infrastructure Secret Management concept might be worth looking into if you are working on bigger scale projects that involve multiple development teams and setups. These services also often deal with secret rotation.

HashiCorp Vault – "Vault is a tool for securely accessing secrets. A secret is anything that you want to tightly control access to, such as API keys, passwords, certificates, and more. Vault provides a unified interface to any secret, while providing tight access control and recording a detailed audit log."

You can deploy your own vault on your own infrastructure or test out a hosted version, which is free for Open Source projects. HashiCorp Vault

You will find a bunch of HashiCorp related packages that will help you to integrate a vault into your project workflow (scmrus/php-vault-env, poc-webapp-vault).

While this is nice, you will need to cache / store credentials somewhere, as you don't want to query the vault on every single access.

HashiCorp Vault is not the only Infrastructure Secret Management solution. There is a nice GitHub Gist that lists other solutions and a nice feature matrix.

Amazon also provides a solution called AWS Secrets Manager, which makes a lot of sense, when you build and deploy on AWS already :)

Gatsby is a free and open source framework based on React that helps developers build blazing fast websites and apps.

While researching some popular static site generation tools, GatsbyJS comes up often. I have played with NuxtJS and Hugo in the past, but what I REALLY like about GatsbyJS is the plugin / modular system. You can build your website with plain-old React and CSS styles, but make your development more efficient by adding node_modules.

Also being able to import any data source with ease, using GraphQL, is amazing. And when it comes to content management, you can easily hook a headless WordPress or Drupal setup into the mix and consume their REST APIs :)

I am not switching my own website to GatsbyJS anytime soon, but it's another tool in my toolbox to consider for future projects!

There are many tutorials on YouTube about getting started, maybe something to consider for the next free-time test drive ;) Enjoy …

Manet is a REST API server which allows capturing screenshots of websites using various parameters.

The Node.js server can use SlimerJS or PhantomJS as headless browser engines.

I have built something similar with CasperJS, but this is far better for those who want a simple, straightforward solution.